Let's take a deep dive into pretraining and fine-tuning today!

1. What Is Pretraining?

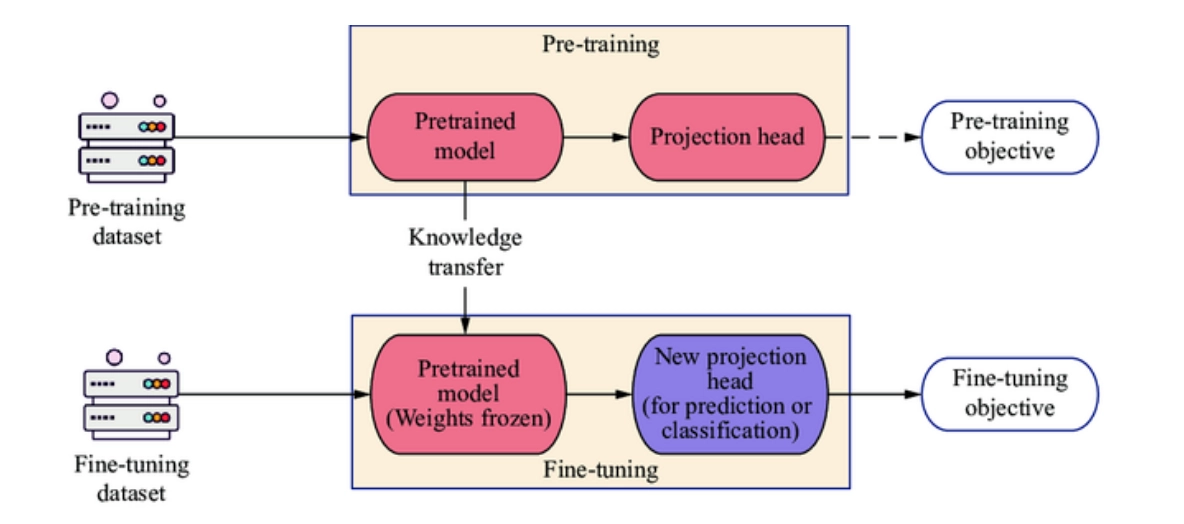

Pretraining is the first step in building AI models. Its goal is to equip the model with general language knowledge. Think of pretraining as “elementary school” for AI, where it learns how to read, understand, and process language using large-scale general datasets (like Wikipedia, books, and news articles). During this phase, the model learns sentence structure, grammar rules, common word relationships, and more.

For example, pretraining tasks might include:

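- Masked Language Modeling (MLM): some words in a sentence are hidden, and the model learns to fill them in (the objective behind BERT-style models).

  Input: “John loves ___ and basketball.”

  The model predicts: “football.”

- Causal Language Modeling (CLM): the model learns to predict the next word from the words that come before it (the objective behind GPT-style models).

  Input: “The weather is great, I want to go to”

  The model predicts: “the park” (one word at a time: first “the”, then “park”).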

Through this process, the model develops a foundational understanding of language.
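To make the two objectives concrete, here is a minimal Python sketch of how training examples could be built from raw text. It assumes whitespace-split words as tokens (real models use subword tokenizers) and masks fixed positions for readability, whereas BERT-style pretraining picks about 15% of tokens at random. The function names are hypothetical.

```python
def make_mlm_example(tokens, mask_positions, mask_token="[MASK]"):
    """Masked Language Modeling: hide some tokens; the model must recover them."""
    inputs = [mask_token if i in mask_positions else tok for i, tok in enumerate(tokens)]
    # Only the hidden positions are scored during training.
    targets = {i: tokens[i] for i in mask_positions}
    return inputs, targets


def make_clm_examples(tokens):
    """Causal Language Modeling: each prefix is paired with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


mlm_inputs, mlm_targets = make_mlm_example(
    ["John", "loves", "football", "and", "basketball", "."], mask_positions={2}
)
print(mlm_inputs)   # ['John', 'loves', '[MASK]', 'and', 'basketball', '.']
print(mlm_targets)  # {2: 'football'}

# Show the last two next-token training pairs for the CLM example.
for prefix, next_token in make_clm_examples(["I", "want", "to", "go", "to", "the", "park"])[-2:]:
    print(" ".join(prefix), "->", next_token)
```

The CLM pairs show that “the park” is produced one token at a time: the prefix ending in “to” is trained to predict “the”, and the prefix ending in “the” is trained to predict “park”.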

2. What Is Fine-Tuning?

Fine-tuning builds on top of a pretrained model by training it on task-specific data to specialize in a particular area. Think of it as “college” for AI—it narrows the focus and develops expertise in specific domains. It uses smaller, targeted datasets to optimize the model for specialized tasks (e.g., sentiment analysis, medical diagnosis, or legal document drafting).

For example:

- To fine-tune a model for legal document generation, you would train it on a dataset of contracts and legal texts.

- To fine-tune a model for customer service, you would use your company’s FAQ logs.

Fine-tuning enables AI to excel at specific tasks without needing to start from scratch.
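Fine-tuning data is usually prepared as input/output pairs. The sketch below turns hypothetical customer-service FAQ log entries into one JSON record per line (JSONL). The `question`/`answer` input keys and the `prompt`/`completion` output keys are assumptions for illustration; match whatever format your fine-tuning tool actually expects.

```python
import json

# Hypothetical FAQ log entries; the "question"/"answer" keys are assumptions.
faq_log = [
    {"question": "How do I return an item?", "answer": "Start a return from the Orders page."},
    {"question": "Do you ship internationally?", "answer": "Yes, to most countries."},
]


def to_finetune_jsonl(entries):
    """Convert Q&A pairs into prompt/completion records, one JSON object per line."""
    lines = []
    for entry in entries:
        question = entry["question"].strip()
        answer = entry["answer"].strip()
        if not question or not answer:
            continue  # skip incomplete log entries
        record = {"prompt": question, "completion": answer}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


print(to_finetune_jsonl(faq_log))
```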

3. Key Differences Between Pretraining and Fine-Tuning

While both processes aim to improve AI’s capabilities, they differ fundamentally in purpose and execution:

| Aspect | Pretraining | Fine-Tuning |
|---|---|---|
| Purpose | To learn general language knowledge, including vocabulary, syntax, and semantic relationships. | To adapt the model for specific tasks or domains. |
| Data Source | Large-scale, general datasets (e.g., Wikipedia, books, news). | Smaller, domain-specific datasets (e.g., medical records, legal texts, customer FAQs). |
| Time and Cost | Time-consuming and computationally expensive, requiring extensive GPU/TPU resources. | Quicker and less resource-intensive, since it starts from an already trained model. |
| Use Case | Provides foundational language capabilities for a wide range of applications. | Enables custom applications, such as translation, sentiment analysis, or text summarization. |
| Parameter Update | Trains all model parameters, starting from random initialization. | Starts from the pretrained weights and either updates all of them (full fine-tuning) or trains only a small number of parameters while keeping the rest frozen (parameter-efficient methods such as LoRA). |

In short: Pretraining builds the “brain” of AI, while fine-tuning teaches it specific “skills.”
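The “Parameter Update” difference can be illustrated with a toy analogy: a two-parameter linear model trained by plain gradient descent stands in for a neural network, and the data, learning rate, and step counts are all made up for illustration. “Pretraining” starts from zero and runs for many steps on a larger general dataset; “fine-tuning” starts from those learned weights and takes only a few steps on a tiny domain dataset.

```python
# Toy analogy: a 2-parameter linear model y = w*x + b stands in for a neural network.


def train(w, b, data, lr, steps):
    """Plain gradient descent on mean squared error, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def mse(w, b, data):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# "General" data: 21 points from y = 2x + 1 (hypothetical stand-in for a large corpus).
general = [(i / 10, 2 * (i / 10) + 1) for i in range(-10, 11)]
# "Domain" data: only three points from a slightly different rule, y = 2x + 1.5.
domain = [(x, 2 * x + 1.5) for x in (0.0, 0.5, 1.0)]

# Pretraining: start from scratch (zeros) and train for a long time on the general data.
w_pre, b_pre = train(0.0, 0.0, general, lr=0.1, steps=500)

# Fine-tuning: start from the pretrained weights and take only a few steps on domain data.
w_ft, b_ft = train(w_pre, b_pre, domain, lr=0.1, steps=20)

# Baseline: the same short budget on the domain data, but starting from scratch.
w_sc, b_sc = train(0.0, 0.0, domain, lr=0.1, steps=20)

print(f"fine-tuned loss:   {mse(w_ft, b_ft, domain):.4f}")
print(f"from-scratch loss: {mse(w_sc, b_sc, domain):.4f}")
```

Under these assumptions, the fine-tuned model ends with a lower loss on the domain data than the model trained from scratch with the same short budget, because it starts close to a good solution.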

4. Why Separate Pretraining and Fine-Tuning?

(1) Efficiency

Pretraining requires vast amounts of data and computational power. For instance, GPT-3’s pretraining was estimated to cost millions of dollars in GPU time. Fine-tuning, by contrast, can achieve strong results on a target task with a much smaller dataset and a fraction of the compute.
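A back-of-the-envelope calculation shows why the two phases differ so much in cost. The sketch uses the widely cited rule of thumb that training compute is roughly 6 × parameters × training tokens, together with GPT-3’s reported size (175 billion parameters) and pretraining set (300 billion tokens). The 10-million-token fine-tuning set is a hypothetical figure, and the estimate assumes full fine-tuning of every parameter.

```python
def training_flops(n_params, n_tokens):
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token
    (forward plus backward pass)."""
    return 6 * n_params * n_tokens


GPT3_PARAMS = 175e9           # parameters reported for GPT-3
GPT3_PRETRAIN_TOKENS = 300e9  # pretraining tokens reported for GPT-3
FINETUNE_TOKENS = 10e6        # hypothetical fine-tuning set: 10 million tokens

pretrain_flops = training_flops(GPT3_PARAMS, GPT3_PRETRAIN_TOKENS)
finetune_flops = training_flops(GPT3_PARAMS, FINETUNE_TOKENS)

print(f"pretraining: {pretrain_flops:.2e} FLOPs")
print(f"fine-tuning: {finetune_flops:.2e} FLOPs")
print(f"pretraining needs about {pretrain_flops / finetune_flops:,.0f}x more compute")
```

With these inputs the pretraining estimate comes out at about 3.15 × 10^23 FLOPs, close to the roughly 3.14 × 10^23 reported in the GPT-3 paper. Because the model size is the same in both phases, the ratio reduces to the ratio of token counts (30,000×).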

(2) General vs. Specific Knowledge

Pretrained models are designed for general-purpose tasks, while fine-tuning tailors these models for specific use cases, expanding their utility.

(3) Reusability

A single pretrained model can be fine-tuned for various domains, such as legal, medical, or educational applications. This modularity reduces redundancy and speeds up AI development.

5. Real-Life Applications

Case 1: Chatbots

- Pretrained model: Understands general conversational language, like greetings or small talk.

- Fine-tuned model: Learns how to answer domain-specific questions, such as those related to product returns.

Case 2: Legal Document Generation

- Pretrained model: Recognizes general language patterns and logical structures.

- Fine-tuned model: Can draft contracts that follow the terminology and structure of legal documents, learned from domain-specific datasets.

Case 3: Medical Diagnosis

- Pretrained model: Understands basic language and context relationships.

- Fine-tuned model: Analyzes medical records and generates insights specific to healthcare.

6. The Future

As AI models grow in size and capability (e.g., GPT-4 and beyond), zero-shot and few-shot prompting, where the model receives only a task description or a handful of worked examples in the prompt without any weight updates, are reducing dependence on fine-tuning for some tasks. However, for highly specialized use cases, fine-tuning remains indispensable.
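Zero-shot and few-shot use differ only in what goes into the prompt; the model’s weights stay unchanged. A minimal sketch, assuming a simple `Input:`/`Output:` template (an arbitrary choice, not any particular model’s required format). The `build_prompt` helper is hypothetical, and it only builds the prompt text without calling any model API.

```python
def build_prompt(task, query, examples=()):
    """Zero-shot if `examples` is empty; few-shot if it holds (input, output) demonstrations."""
    lines = [task, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The model is expected to continue the text after the final "Output:".
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


task = "Classify the sentiment of each review as positive or negative."
query = "The battery died after two days."

zero_shot = build_prompt(task, query)
few_shot = build_prompt(
    task,
    query,
    examples=[("Great screen and fast shipping.", "positive"),
              ("It broke the first week.", "negative")],
)
print(few_shot)
```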

Trends to watch:

- Stronger pretrained models: Increasingly capable of handling a broader range of tasks out of the box.

- Simplified fine-tuning tools: Making it easier for businesses and individuals to customize AI models.

7. One-Line Summary

Pretraining is the “basic education” that equips AI with foundational knowledge, while fine-tuning is the “advanced training” that makes it an expert in specific domains. Together, they are the backbone of modern AI capabilities.

Final Thoughts

Next time you interact with AI, remember the two phases behind its smarts: Pretraining to make it a “generalist” and fine-tuning to transform it into a “specialist.” Stay tuned for more AI insights tomorrow—follow me to explore the magic of AI!

Finally, feel free to check out my other AI Insights blog posts here.