Ray Serve is a cutting-edge model serving library built on the Ray framework, designed to simplify and scale AI model deployment. Whether you’re chaining models in sequence, running them in parallel, or dynamically routing requests, Ray Serve excels at handling complex, distributed inference pipelines. Unlike Ollama or FastAPI, it combines ease of use with powerful scaling, multi-model management, and Pythonic APIs. In this post, we’ll explore how Ray Serve compares to other solutions and why it stands out for large-scale, multi-node AI serving.

Before Introducing Ray Serve, We Need to Understand Ray

What is Ray?

Ray is an open-source distributed computing framework that provides the core tools and components for building and running distributed applications. Its goal is to enable developers to easily scale single-machine programs to distributed environments, supporting high-performance tasks such as distributed model training, large-scale data processing, and distributed inference.

Core Modules of Ray

- Ray Core

  - The foundation of Ray, providing distributed scheduling, task execution, and resource management.

  - Allows Python functions to be transformed into distributed tasks using the `@ray.remote` decorator.

  - Ideal for distributed data processing and computation-intensive workloads.

- Ray Libraries

  - Built on top of Ray Core, these are specialized tools designed for specific tasks. Examples include:

    - Ray Tune: For hyperparameter search and experiment optimization.

    - Ray Train: For distributed model training.

    - Ray Serve: For distributed model serving.

    - Ray Data: For large-scale data and stream processing.

In simpler terms, Ray Core is the underlying engine, while the various tools (like Ray Serve) are specific modules built on top of it to handle specific functionalities.

Now Let’s Talk About Ray Serve...

Many people ask:

“Is Ray Serve just a backend service that routes user requests to an LLM (Large Language Model) and returns the results?”

You’re half right! Ray Serve does exactly that, but it’s much more than just a “delivery boy.” Compared to a basic FastAPI backend or a dedicated tool like Ollama, Ray Serve is a flexible, capable, and self-scaling assistant that handles much more than just routing.

Let’s dive in and break down what Ray Serve does, and how it compares to Ollama or a custom-built FastAPI solution.

Ray Serve: The Versatile Multi-Tasker of Model Serving

In short, Ray Serve’s mission is to: “Handle user requests, route them to the right model for processing, optimize resources, and dynamically scale as needed.”

It’s like a supercharged scheduler that performs the following key tasks:

- Setting up model services: You tell it where your model is (e.g., a GPT-4 instance), and it will automatically handle receiving requests, sending inference tasks, and even batching requests.

- Managing traffic spikes: When user requests flood in like a tidal wave, it dynamically scales instances to handle the pressure.

- Supporting multiple models: With Ray Serve, you can host multiple models in a single service (e.g., one for text generation and another for spam classification) without any issues.

So no, Ray Serve isn’t just “doing the grunt work”: it also spreads requests across replicas, adds replicas when load rises, and restarts replicas that fail.
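The request batching mentioned above is easiest to understand as a small queue in front of the model. The sketch below is plain `asyncio`, not Ray Serve's implementation: `MicroBatcher`, `fake_model`, and the parameter names are all hypothetical. Ray Serve exposes the same idea through its `@serve.batch` decorator.

```python
import asyncio

class MicroBatcher:
    """Collects single requests and calls the model once per batch."""

    def __init__(self, batch_fn, max_batch_size=4, wait_s=0.01):
        self.batch_fn = batch_fn
        self.max_batch_size = max_batch_size
        self.wait_s = wait_s
        self.pending = []      # (input, future) pairs waiting for a batch
        self.batch_sizes = []  # kept only so the demo can show what happened
        self._timer = None

    async def submit(self, item):
        fut = asyncio.get_running_loop().create_future()
        self.pending.append((item, fut))
        if len(self.pending) >= self.max_batch_size:
            self._flush()  # batch is full: run it right away
        elif self._timer is None:
            # First item of a new batch: start the timeout clock.
            self._timer = asyncio.create_task(self._flush_after_timeout())
        return await fut

    async def _flush_after_timeout(self):
        await asyncio.sleep(self.wait_s)
        self._timer = None  # cleared first so _flush does not cancel this task
        self._flush()

    def _flush(self):
        if self._timer is not None:
            self._timer.cancel()
            self._timer = None
        batch, self.pending = self.pending, []
        if not batch:
            return
        self.batch_sizes.append(len(batch))
        outputs = self.batch_fn([item for item, _ in batch])
        for (_, fut), out in zip(batch, outputs):
            fut.set_result(out)

def fake_model(batch):
    # Stand-in for a model that is cheaper to call once on many inputs.
    return [text.upper() for text in batch]

async def main():
    batcher = MicroBatcher(fake_model, max_batch_size=4, wait_s=0.01)
    words = ["a", "b", "c", "d", "e"]
    outputs = await asyncio.gather(*(batcher.submit(w) for w in words))
    return outputs, batcher.batch_sizes

outputs, batch_sizes = asyncio.run(main())
print(outputs, batch_sizes)
```

Five concurrent requests end up as one full batch of four plus a timed-out batch of one, so the model runs twice instead of five times.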

Application Patterns of Ray Serve: Adapting to Multi-Model and Multi-Step Inference

The diagrams in this section are from https://www.anyscale.com/glossary/what-is-ray-serve.

In modern AI systems, multi-model and multi-step inference has become a common requirement. Whether you’re processing images, text, or multi-modal inputs, model services need to support flexible inference patterns. Ray Serve excels here by seamlessly adapting to the following three classic patterns, offering simple and efficient Pythonic APIs to minimize configuration complexity.

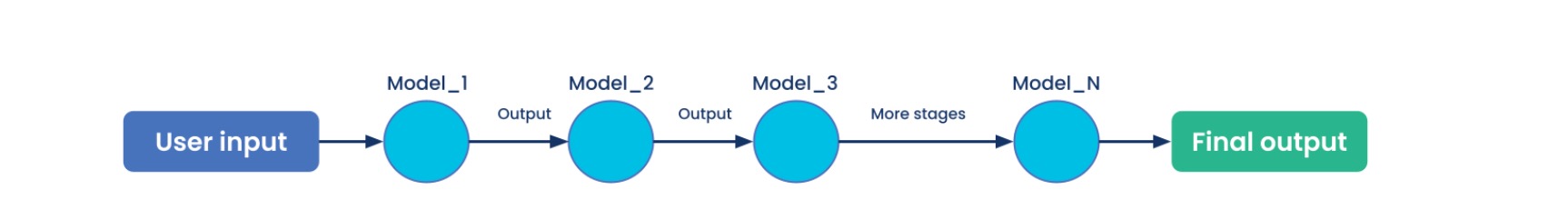

Pattern 1: Sequential Model Inference (Chaining Models in Sequence)

Explanation:

In this pattern, user input passes through multiple models sequentially, with each model’s output serving as the input for the next. This chained structure is common in tasks like image processing or data transformations.

Example:

For an image enhancement task, the input might go through a denoising model (Model_1), followed by a feature extraction model (Model_2), and finally a classification model (Model_3).

Advantages of Ray Serve:

- Efficient Communication: Data is passed between models using shared memory, reducing overhead.

- Flexible Scheduling: Resources are dynamically allocated to ensure stable and efficient inference pipelines.
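The chaining pattern can be sketched in a few lines of plain `asyncio`. This is an illustration of the control flow only: the three "models" are hypothetical stand-in functions, and in Ray Serve each stage would typically be its own deployment called through a handle rather than a local coroutine.

```python
import asyncio

async def denoise(image):
    # Model_1 stand-in: clip negative "noise" values to zero.
    return [max(pixel, 0) for pixel in image]

async def extract_features(image):
    # Model_2 stand-in: reduce the image to a couple of summary features.
    return {"mean": sum(image) / len(image), "peak": max(image)}

async def classify(features):
    # Model_3 stand-in: a trivial threshold classifier.
    return "bright" if features["mean"] > 0.5 else "dark"

async def pipeline(image):
    # Each stage consumes the previous stage's output.
    cleaned = await denoise(image)
    features = await extract_features(cleaned)
    return await classify(features)

label = asyncio.run(pipeline([0.9, -0.2, 0.8, 0.7]))
print(label)
```

Because every stage is awaited, a slow stage does not block other requests that are in a different part of the chain.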

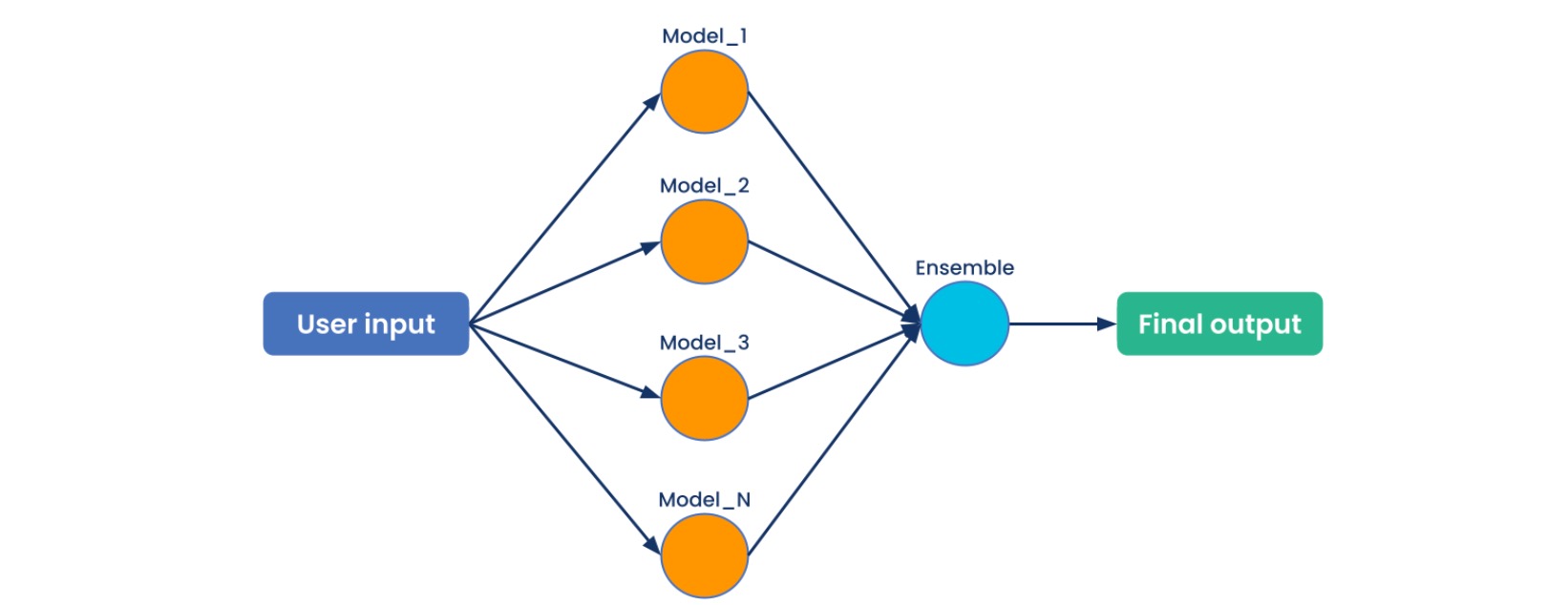

Pattern 2: Parallel Model Inference (Ensembling Models)

Explanation:

In this pattern, user input is sent to multiple models simultaneously, with each model processing the request independently. The results are then aggregated by an ensemble step to produce the final output. This pattern is often used in recommendation systems or ensemble learning, where outputs from multiple models are combined for decision-making.

Example:

A recommendation system might use collaborative filtering (Model_1), a deep learning model (Model_2), and a rule-based model (Model_3) to make predictions, then select the best recommendation based on business logic.

Advantages of Ray Serve:

- Flexible Routing Mechanism: Easily configure multiple model endpoints for parallel processing.

- High-Concurrency Handling: Ray’s distributed architecture efficiently manages high-load scenarios with multiple models.
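A minimal sketch of the ensemble pattern, again in plain `asyncio` rather than Ray Serve itself. The three recommenders and their scores are made up for illustration, and "pick the highest score" is just one possible piece of business logic.

```python
import asyncio

async def collaborative_filtering(user_id):
    return {"item": "book", "score": 0.72}

async def deep_model(user_id):
    return {"item": "laptop", "score": 0.91}

async def rule_based(user_id):
    return {"item": "pen", "score": 0.40}

async def ensemble(user_id):
    # Fan the same input out to every model and wait for all of them.
    candidates = await asyncio.gather(
        collaborative_filtering(user_id),
        deep_model(user_id),
        rule_based(user_id),
    )
    # Aggregation step: keep the candidate with the highest score.
    return max(candidates, key=lambda c: c["score"])

best = asyncio.run(ensemble("user-42"))
print(best)
```

The overall latency is set by the slowest model rather than the sum of all three, which is the main reason to fan out in parallel.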

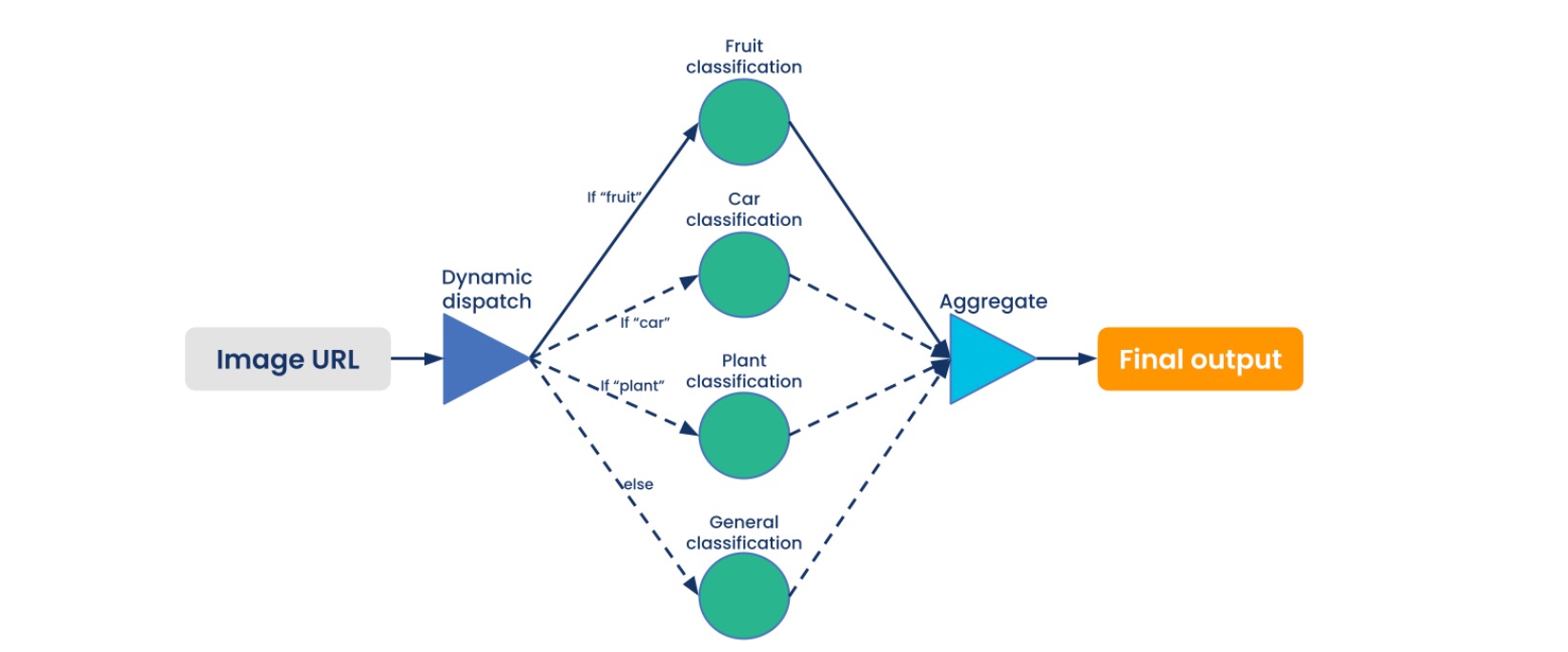

Pattern 3: Dynamic Model Dispatching (Dynamic Dispatching to Models)

Explanation:

Here, models are dynamically selected based on the input’s characteristics, ensuring that only the necessary models are triggered for inference. This is ideal for scenarios with complex classification tasks or diverse model types.

Example:

In an image classification system, depending on the input image (e.g., a fruit, car, or plant), a specialized model is dynamically chosen for inference instead of invoking every model in the pipeline.

Advantages of Ray Serve:

- Resource Efficiency: Only the required models are triggered, avoiding unnecessary computation.

- Flexible Business Logic: Dynamic routing rules can be easily defined with simple Python code, eliminating the need for complex YAML configurations.
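The dispatch pattern boils down to a lookup followed by a single model call. The sketch below uses plain `asyncio`; the routing rule, the `coarse_label` field, and the model names are all hypothetical, and a real router might itself be a lightweight classifier.

```python
import asyncio

calls = []  # records which specialist models actually ran

def make_model(name):
    async def model(image):
        calls.append(name)
        return f"{name} model: {image['content']}"
    return model

# One specialist per category; only the selected one is ever invoked.
ROUTES = {name: make_model(name) for name in ("fruit", "car", "plant")}

def pick_category(image):
    # Hypothetical routing rule: trust a precomputed coarse label.
    return image["coarse_label"]

async def dispatch(image):
    category = pick_category(image)
    try:
        model = ROUTES[category]
    except KeyError:
        raise ValueError(f"no model registered for {category!r}") from None
    return await model(image)

result = asyncio.run(dispatch({"coarse_label": "car", "content": "sedan"}))
print(result, calls)
```

The routing rule is ordinary Python, so changing it means editing a function rather than a configuration file.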

Unique Advantages of Ray Serve

Compared to other model-serving frameworks like TensorFlow Serving or NVIDIA Triton, Ray Serve offers unique advantages for multi-step and multi-model inference scenarios:

- Dynamic Scheduling: Adjust resources and routing strategies based on workload requirements.

- Efficient Communication: Optimize data transfer between models using shared memory to reduce overhead.

- Granular Resource Allocation: Assign fractional CPU or GPU resources to model instances, improving utilization.

- Pythonic API: Simplify implementation with intuitive Python interfaces, avoiding complex YAML setups.
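To see why fractional allocation improves utilization, a little arithmetic helps. The helper below is a hypothetical back-of-the-envelope calculation, not a Ray API, and it assumes a fractional request must fit on a single GPU rather than being split across devices. In Ray Serve the per-replica share would be declared with something like `ray_actor_options={"num_gpus": 0.25}` on a deployment.

```python
from fractions import Fraction

def replicas_that_fit(total_gpus: int, gpu_per_replica: str) -> int:
    """How many replicas fit if each one needs a fixed fraction of a GPU."""
    share = Fraction(gpu_per_replica)  # exact arithmetic, no float rounding
    if not 0 < share <= 1:
        raise ValueError("gpu_per_replica must be in (0, 1]")
    per_gpu = 1 // share  # whole replicas that fit on one device
    return per_gpu * total_gpus

print(replicas_that_fit(2, "1"))     # one replica per GPU
print(replicas_that_fit(2, "0.25"))  # four replicas share each GPU
print(replicas_that_fit(2, "0.4"))   # two per GPU, 0.2 of each GPU left idle
```

A small model that needs a quarter of a GPU can therefore run eight replicas on two GPUs instead of two.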

Ollama vs. Ray Serve vs. Custom FastAPI: A Comparison

1. Ollama: The Lightweight Assistant for LLMs

Ollama is designed to quickly set up local LLM services like LLaMA or other open-source models.

Strengths:

- Plug-and-Play Simplicity: Minimal configuration required.

- LLM-Focused: Optimized for large language models with offline deployment support.

Weaknesses:

- Limited Flexibility: Restricted to LLMs and lacks support for multi-model management.

- Scalability Concerns: Not ideal for high-concurrency or distributed deployments.

2. Custom FastAPI: The DIY Player for Enthusiasts

FastAPI is a flexible web framework for building lightweight APIs, including ones that interface with backend models.

Strengths:

- Full Customization: You have complete control over the logic and routing.

- Lightweight: Ideal for small-scale projects.

Weaknesses:

- Manual Scaling: Requires hand-crafted solutions for scaling and multi-model management.

- Complex Distributed Deployments: Needs additional tools like Kubernetes for distributed setups.

3. Ray Serve: The Smart Manager

Ray Serve aims to pair the convenience of a dedicated tool like Ollama with the flexibility of a FastAPI backend, and adds distributed capabilities on top.

Strengths:

- Multi-Model Support: Host multiple models simultaneously.

- Dynamic Scaling: Automatically adjust resources based on traffic.

- Distributed Deployment: Handles multi-node clusters effortlessly.

- Batching Optimization: Combines multiple requests for efficient processing.

Weaknesses:

- Learning Curve: Configuration is more complex than FastAPI.

- Ray Dependency: May feel like overkill for single-node setups.
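Dynamic scaling is, at its core, a rule that maps current load to a replica count. The function below is a deliberately simplified sketch of such a rule, not Ray Serve's actual autoscaling algorithm. The parameter names are hypothetical, loosely modeled on the minimum/maximum replica bounds and per-replica load target that autoscalers commonly expose.

```python
import math

def desired_replicas(ongoing_requests: int, target_per_replica: int,
                     min_replicas: int, max_replicas: int) -> int:
    """Replica count that keeps each replica near its target load."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(ongoing_requests / target_per_replica)
    # Never scale below the floor or above the ceiling.
    return max(min_replicas, min(max_replicas, wanted))

print(desired_replicas(0, 2, 1, 10))    # idle: stay at the minimum
print(desired_replicas(7, 2, 1, 10))    # moderate load: scale up
print(desired_replicas(100, 2, 1, 10))  # spike: capped at the maximum
```

A production autoscaler would also smooth the signal over time so that short bursts do not cause replicas to be added and removed repeatedly.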

Choosing the Right Tool

| Feature/Framework | Ray Serve | Ollama | FastAPI |
|---|---|---|---|
| Multi-Model Support | Strong | Moderate | Weak |
| Distributed Deployment | Yes | No | No |
| Dynamic Scaling | Yes | No | No |
| Learning Curve | Moderate | Low | Low |
| Best For | Large-scale distributed projects, complex model serving | Quick local LLM deployment | Small projects, API customization |

Conclusion

If you just want a quick, local LLM deployment, go with Ollama.

For flexible API development, FastAPI is your best choice.

If you need multi-model management, dynamic scaling, or distributed deployment, Ray Serve is the strongest fit of the three.

Ray Serve acts as the "smart manager" of backend services, effortlessly handling both single-node and multi-node deployments. Stay tuned for a deeper dive into how Ray Serve dynamically adjusts resources based on traffic!