1. What Are Parameters?

In deep learning, parameters are the trainable components of a model, such as weights and biases, which determine how the model responds to input data. These parameters adjust during training to minimize errors and optimize the model's performance. Parameter count refers to the total number of such weights and biases in a model.
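To show what "parameter count" means in practice, the sketch below counts the weights and biases of a fully connected network from its layer widths. The layer sizes are hypothetical. It assumes every layer has a full weight matrix plus one bias per output unit. Convolutional or attention layers would need their own formulas.

```python
def dense_param_count(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the width of each layer, input first, e.g. [784, 128, 10].
    Each pair of consecutive layers contributes an (n_in x n_out) weight
    matrix plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weights + biases for this layer
    return total


# Hypothetical classifier: 784 inputs, one hidden layer of 128 units, 10 outputs.
print(dense_param_count([784, 128, 10]))  # → 101770
```

A single input feeding a single output, `dense_param_count([1, 1])`, gives 2: exactly the one weight and one bias of a simple linear regression.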

Loosely, you can think of parameters as the “brain capacity” of an AI model: the more parameters it has, the more patterns and information it can potentially store and represent.

For example:

- A simple linear regression model might only have a few parameters, such as weights (w) and a bias (b).

- GPT-3, a massive language model, boasts 175 billion parameters, requiring immense computational resources and data to train.
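To make "parameters adjust during training" concrete, here is a minimal sketch that fits the two parameters of a linear regression, w and b, by gradient descent on mean squared error. The data (generated from y = 2x + 1), the learning rate, and the number of steps are illustrative assumptions.

```python
def fit_linear(xs, ys, lr=0.05, steps=2000):
    """Fit y = w*x + b by plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # the model's only two parameters, initialized to zero
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Move each parameter a small step against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # synthetic data from y = 2x + 1
w, b = fit_linear(xs, ys)
print(round(w, 3), round(b, 3))  # → 2.0 1.0
```

A large language model is trained on the same principle. The difference is that it adjusts billions of parameters at once instead of two.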

2. The Relationship Between Parameter Count and Model Performance

In deep learning, a model's parameter count is often positively correlated with its performance. Scaling laws describe this relationship empirically: when parameters, training data, and compute are increased together, a model's test loss tends to fall smoothly and predictably, and its ability to perform complex tasks improves.
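Scaling-law studies such as Kaplan et al. (2020) model test loss as a power law in the number of parameters N, roughly L(N) = (N_c / N)^α. The sketch below evaluates that form using made-up constants `N_C` and `ALPHA`. These are assumptions for illustration, not fitted values for any real model family. The point is the characteristic shape of the curve. Each tenfold increase in N multiplies the loss by the same factor, 10^(-α). Improvements are therefore steady, but each one is smaller in absolute terms than the last.

```python
def power_law_loss(n_params, n_c, alpha):
    """Loss predicted by a power law L(N) = (N_c / N) ** alpha."""
    return (n_c / n_params) ** alpha


# Illustrative constants (assumptions, not fitted to any real model family).
N_C = 1e14
ALPHA = 0.08

for n in [1e8, 1e9, 1e10, 1e11]:
    print(f"{n:.0e} params -> loss {power_law_loss(n, N_C, ALPHA):.3f}")

# Every 10x increase in N multiplies the loss by the same factor, 10 ** -ALPHA.
ratio = power_law_loss(1e10, N_C, ALPHA) / power_law_loss(1e9, N_C, ALPHA)
print(round(ratio, 4))
```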

Why Are Bigger Models Often Smarter?

- Higher Expressive Power: Larger models can capture more complex patterns and features in data. For instance, they not only grasp basic grammar but also understand deep semantic and contextual nuances.

- Stronger Generalization: With sufficient training data, larger models generalize better to unseen scenarios, such as answering novel questions or reasoning about unfamiliar topics.

- Versatility: Bigger models can handle multiple tasks with minimal or no additional training. For example, OpenAI's GPT models excel in creative writing, code generation, translation, and logical reasoning.

However, bigger isn’t always better. If a model has far more capacity than the available training data or the task requires, it can overfit: it memorizes quirks of the training set instead of learning patterns that generalize to new inputs.
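Overfitting can be seen even at toy scale. In the plain-Python sketch below, ten noisy samples are drawn from a hypothetical line, y = 2x + 1. The code fits them two ways. The first is a 2-parameter least-squares line. The second is a degree-9 polynomial, which has 10 coefficients and therefore as many parameters as data points. It is evaluated in Lagrange form so that it passes through every training point exactly. The noise level, the random seed, and the offset test points are all assumptions. With as many parameters as data points, the larger model reaches zero training error by fitting the noise. Its error on the unseen points in between is typically worse than the line's.

```python
import random


def fit_line(xs, ys):
    """Least-squares line y = w*x + b (2 parameters)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    b = my - w * mx
    return lambda x: w * x + b


def fit_interpolant(xs, ys):
    """Degree n-1 polynomial through all n points, in Lagrange form (n parameters)."""
    def p(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return p


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)
x_train = [i / 9 for i in range(10)]
y_train = [2 * x + 1 + rng.gauss(0, 0.1) for x in x_train]
# "Unseen" inputs, offset so they fall between the training points.
x_test = [(i + 0.5) / 9 for i in range(9)]
y_test = [2 * x + 1 for x in x_test]

line = fit_line(x_train, y_train)
interp = fit_interpolant(x_train, y_train)
for name, model in [("2 params", line), ("10 params", interp)]:
    print(f"{name}: train MSE {mse(model, x_train, y_train):.5f}, "
          f"test MSE {mse(model, x_test, y_test):.5f}")
```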

3. The Practical Significance of Parameter Count

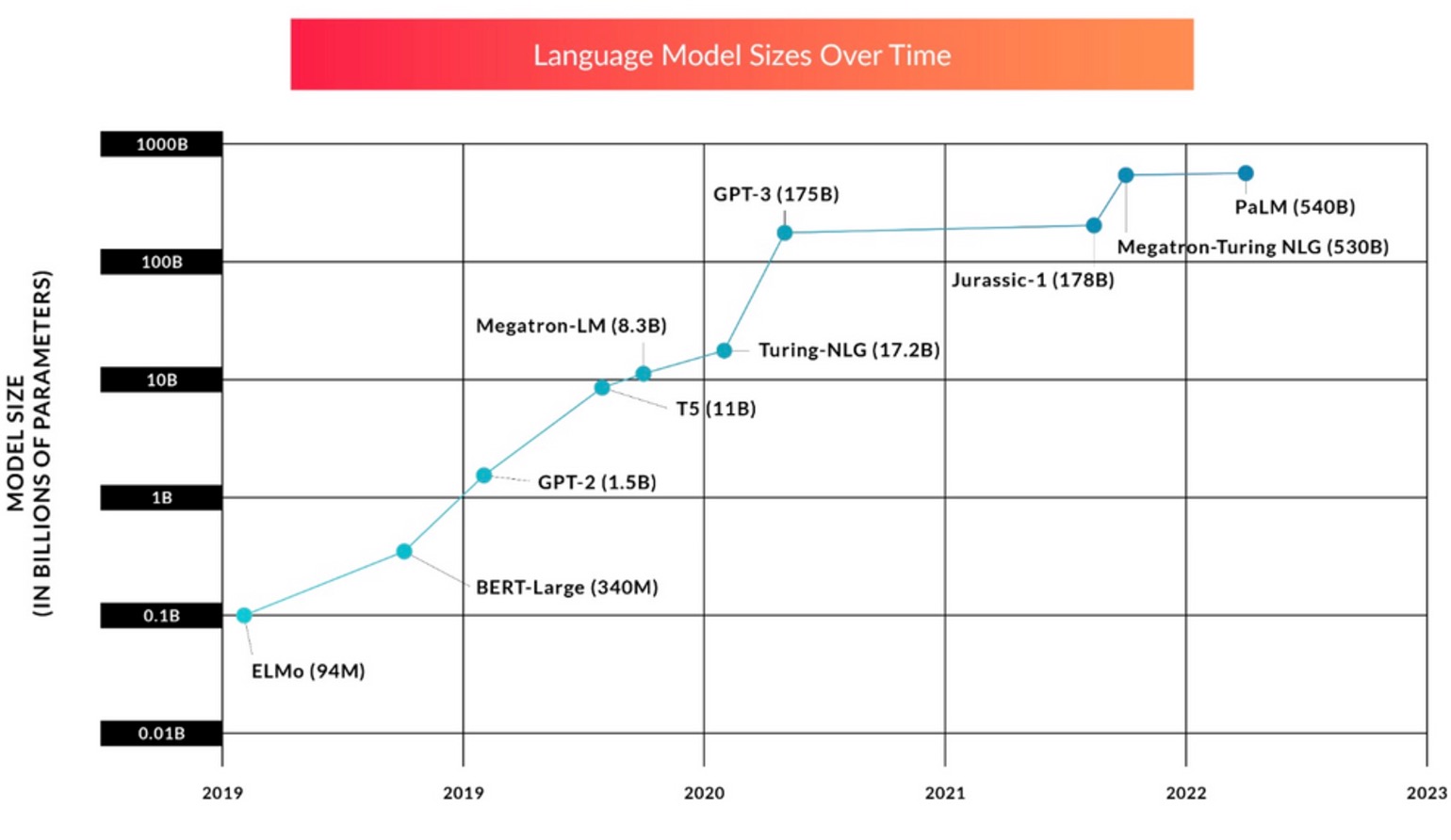

Language Models at Scale

Here’s a comparison of parameter counts for well-known models:

- GPT-2: 1.5 billion parameters

- GPT-3: 175 billion parameters

- GPT-4: not officially disclosed by OpenAI (unofficial estimates put it at around 1.7 trillion parameters)

As parameter counts grow, these models have demonstrated remarkable improvements in:

- Text fluency: Generating coherent and contextually appropriate responses.

- Reasoning: Solving logical puzzles or providing detailed explanations.

- Creativity: Writing essays, poetry, and even code snippets.
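One practical consequence of these parameter counts is memory. The sketch below gives a rough estimate of how much memory the weights alone occupy. It assumes each parameter is stored as a 4-byte (fp32) or 2-byte (fp16) number. It ignores activations, optimizer state, and other runtime overhead, so real requirements are higher. GPT-4 is left out because its parameter count has not been officially disclosed.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(n_params, dtype="fp16"):
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for name, n in [("GPT-2", 1.5e9), ("GPT-3", 175e9)]:
    print(f"{name}: {weight_memory_gb(n, 'fp32'):.0f} GB in fp32, "
          f"{weight_memory_gb(n, 'fp16'):.0f} GB in fp16")
```

By this estimate, GPT-3's weights take about 350 GB in fp16. That is far more than a single 80 GB GPU can hold, which is one reason models at this scale are split across many devices.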

In Computer Vision

Parameter count is equally significant in image recognition. For instance:

- ResNet: Widely used versions have tens of millions of parameters (for example, about 25 million for ResNet-50).

- Vision Transformers (ViT): These modern architectures range from tens of millions to hundreds of millions of parameters. When pretrained on sufficiently large datasets, they can outperform traditional convolutional networks on image recognition benchmarks.
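To see where ViT parameter counts come from, the sketch below totals the weights of a ViT classifier from its width, depth, and MLP size. It assumes a standard layout: a patch-embedding projection, a learnable class token and position embeddings, transformer blocks with two LayerNorms each and biases on every linear layer, a final LayerNorm, and a linear classification head. Real implementations may differ slightly.

```python
def vit_param_count(dim, depth, mlp_dim, patch_size=16, image_size=224,
                    channels=3, num_classes=1000):
    """Approximate parameter count of a standard ViT image classifier."""
    num_patches = (image_size // patch_size) ** 2
    patch_embed = patch_size * patch_size * channels * dim + dim  # linear patch projection
    cls_token = dim
    pos_embed = (num_patches + 1) * dim  # one position embedding per patch, plus the class token

    attention = 4 * (dim * dim + dim)  # Q, K, V and output projections
    mlp = (dim * mlp_dim + mlp_dim) + (mlp_dim * dim + dim)  # two linear layers
    layer_norms = 2 * (2 * dim)  # scale and shift for each of two LayerNorms
    per_block = attention + mlp + layer_norms

    final_norm = 2 * dim
    head = dim * num_classes + num_classes
    return patch_embed + cls_token + pos_embed + depth * per_block + final_norm + head


# ViT-Base-like configuration: width 768, 12 blocks, MLP width 3072.
print(f"{vit_param_count(768, 12, 3072):,}")  # → 86,567,656
# A larger configuration: width 1024, 24 blocks, MLP width 4096.
print(f"{vit_param_count(1024, 24, 4096):,}")
```

The base configuration gives about 86.6 million parameters, in line with the roughly 86M reported for ViT-Base. The larger one lands around 304 million. Almost all of the parameters sit in the transformer blocks, whose size grows with the square of the width.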

4. Are Bigger Models Always Better?

Advantages of Large Models

- Capture Complex Patterns: They can model intricate relationships in data that smaller models might miss.

- Task Versatility: One large model can handle diverse tasks without needing significant fine-tuning.

- Breakthroughs in Performance: Larger models often lead to state-of-the-art results across many benchmarks.

Drawbacks of Large Models

- High Computational Cost: Bigger models require immense resources for both training and inference. For example, training GPT-3 reportedly cost millions of dollars in compute time.

- Energy Consumption: Training large models has a significant environmental impact, as it demands enormous amounts of energy.

- Efficiency Issues: For certain tasks, smaller, task-specific models may achieve similar results with far fewer resources.

As a result, choosing the right model size involves balancing performance gains against computational efficiency.
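A common rule of thumb for the compute cost of training a dense transformer is about 6 floating-point operations (FLOPs) per parameter per training token, written C ≈ 6ND. The sketch below applies it at GPT-3 scale, using the roughly 300 billion training tokens reported for GPT-3. The sustained cluster throughput is a hypothetical assumption, not a figure for any real training run.

```python
def training_flops(n_params, n_tokens):
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6 * n_params * n_tokens


# GPT-3 scale: 175 billion parameters, roughly 300 billion training tokens.
flops = training_flops(175e9, 300e9)
print(f"{flops:.2e} FLOPs")  # → 3.15e+23 FLOPs

# Hypothetical hardware assumption: a cluster sustaining 1e17 FLOP/s.
seconds = flops / 1e17
print(f"{seconds / 86400:.1f} days at a sustained 1e17 FLOP/s")  # → 36.5 days ...
```

Because compute scales with the product of parameters and tokens, a model that is 10 times larger and trained on 10 times as much data needs roughly 100 times the compute.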

5. Trends in Parameter Optimization: Big Models vs. Small Models

Despite the success of large models, recent trends highlight the growing importance of efficient AI:

- Parameter Compression: Techniques like knowledge distillation (training a small "student" model to mimic a large "teacher") and model pruning (removing weights that contribute little) retain much of a large model's capability in a smaller, faster model.

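- Efficient Inference: Lightweight models such as DistilBERT suit mobile devices and embedded systems better than their full-size counterparts. DistilBERT is a distilled version of BERT that is about 40% smaller and 60% faster while retaining most of its language-understanding performance. Models like this make AI more accessible and sustainable.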

- Task-Specific Optimization: Instead of using a massive model for every problem, fine-tuning smaller models for specific tasks often yields better cost-effectiveness.

The likely future of AI involves large-scale pretraining paired with smaller, fine-tuned deployments, combining the strengths of both approaches.
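The two compression techniques named above can be sketched in a few lines of plain Python. `magnitude_prune` zeroes out the smallest-magnitude weights, which is one simple pruning criterion among several. `distillation_loss` computes the soft-target term from Hinton et al.'s knowledge distillation: the KL divergence between temperature-softened teacher and student distributions, scaled by T². The weights, logits, and temperature are hypothetical. In practice, this term is usually combined with the ordinary loss on the true labels and minimized with respect to the student's parameters.

```python
import math


def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of a flat weight list."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices of the k weights with the smallest absolute value.
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return kl * temperature ** 2


w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(magnitude_prune(w, 0.5))  # → [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
print(distillation_loss([2.0, 0.5, -1.0], [2.5, 0.3, -1.2]))
```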

6. One-Line Summary

Parameter count represents the "brain capacity" of an AI model. While larger models often excel at complex tasks, balancing size and efficiency is key to sustainable AI development.

Your Thoughts

Do you think the race for larger models is sustainable, or should the focus shift toward efficiency and accessibility? Share your perspective in the comments below!