Knowledge Distillation in AI is a powerful method where large models (teacher models) transfer their knowledge to smaller, efficient models (student models). This technique enables AI to retain high performance while reducing computational costs, speeding up inference, and facilitating deployment on resource-constrained devices like mobile phones and edge systems. By mimicking the outputs of teacher models, student models deliver lightweight, optimized solutions ideal for real-world applications. Let’s explore how knowledge distillation works and why it’s transforming modern AI.

1. What Is Knowledge Distillation?

Knowledge distillation is a technique where a large model (Teacher Model) transfers its knowledge to a smaller model (Student Model). The goal is to compress the large model’s capabilities into a lightweight version that is faster, more efficient, and easier to deploy, while retaining high performance.

Think of a teacher (large model) simplifying complex ideas for a student (small model). The teacher provides not just the answers but also insights into how the answers were derived, allowing the student to replicate the process efficiently.

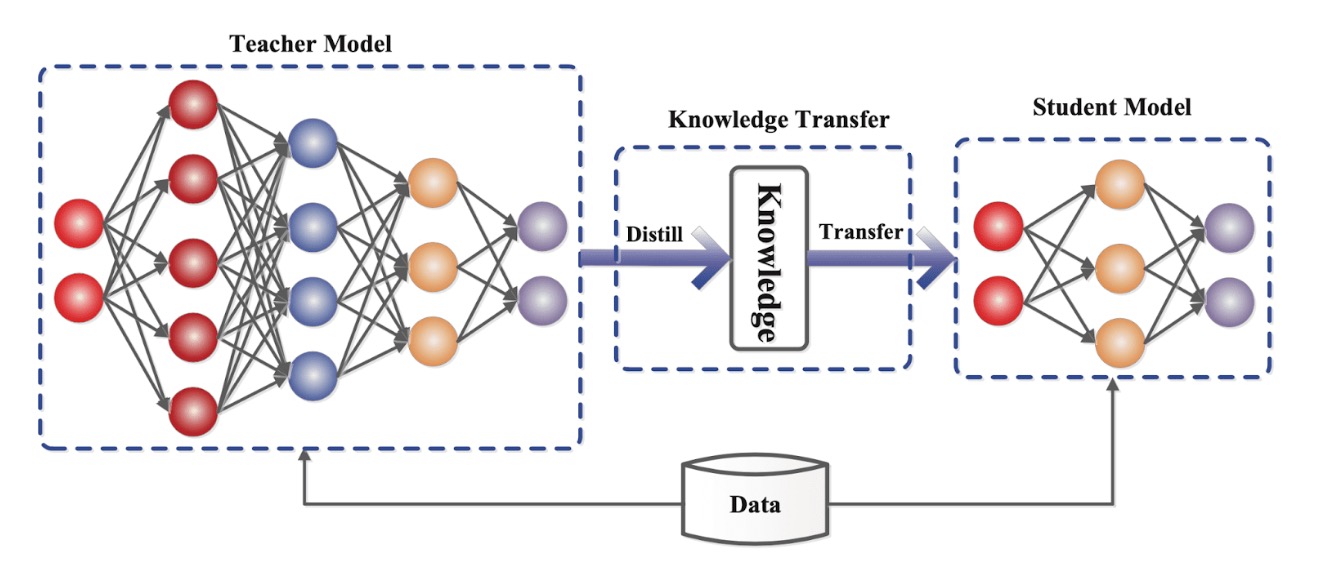

An illustration from “Knowledge Distillation: A Survey” explains the idea:

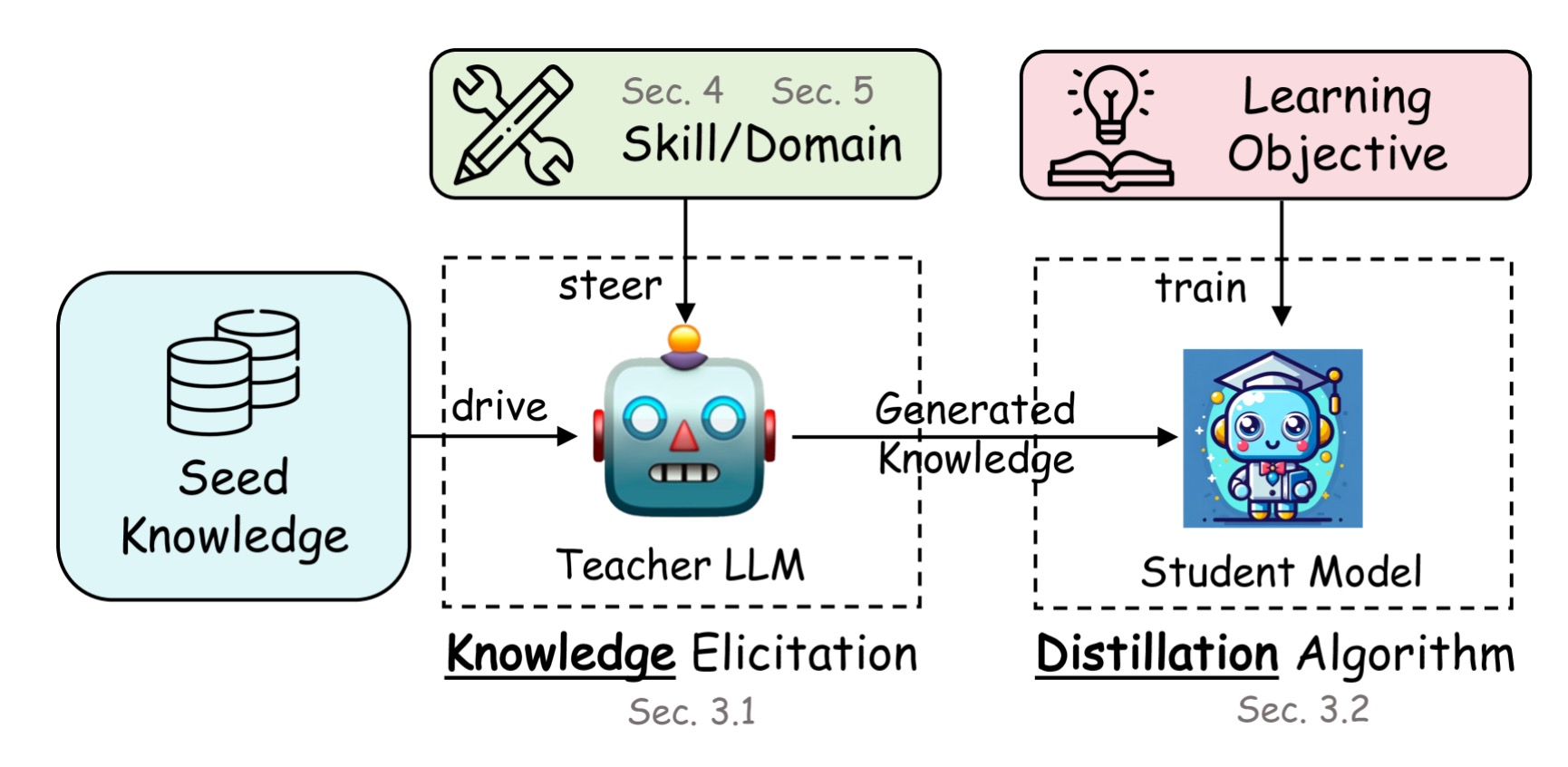

Another figure comes from “A Survey on Knowledge Distillation of Large Language Models”:

2. Why Is Knowledge Distillation Important?

Large models (e.g., GPT-4) are powerful but have significant limitations:

- High Computational Costs: Require expensive hardware and energy to run.

- Deployment Challenges: Difficult to use on mobile devices or edge systems.

- Slow Inference: Unsuitable for real-time applications like voice assistants.

Knowledge distillation helps address these issues by:

- Reducing Model Size: Smaller models require fewer resources.

- Improving Speed: Faster inference makes them suitable for real-time applications and resource-constrained environments.

- Maintaining Accuracy: By learning from large models, smaller models can often achieve performance close to the teacher’s.

3. How Does Knowledge Distillation Work?

The process involves several key steps:

(1) Train the Teacher Model

A large model is trained on a comprehensive dataset to achieve high accuracy and generalization.

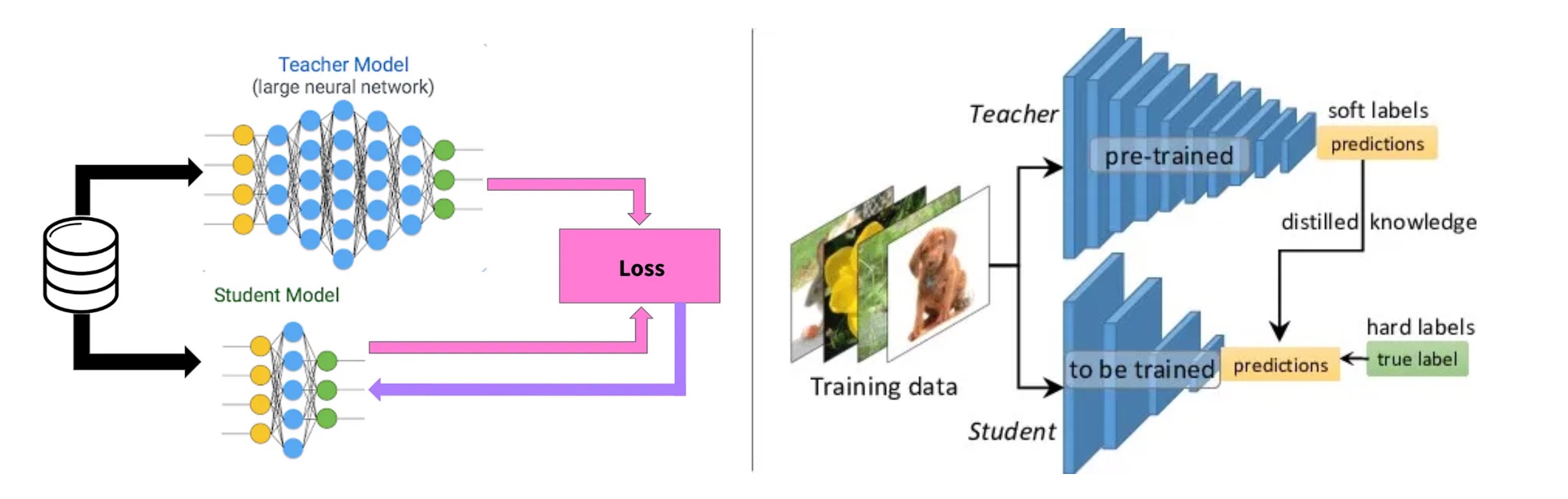

(2) Generate Soft Targets

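- The teacher model outputs a full probability distribution over the classes rather than a single label, often softened by dividing its logits by a temperature T > 1 before the softmax.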

- For example, when classifying an image, instead of just saying “cat,” the teacher might output:

  - Cat: 80%
  - Dog: 15%
  - Fox: 5%

- These soft targets provide rich information about how the teacher distinguishes between categories.
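To see where softened targets come from, here is a minimal pure-Python sketch of a temperature-scaled softmax, the temperature trick commonly used in distillation. The logits are hypothetical, chosen as log-probabilities so that T = 1 reproduces the 80/15/5 split above; raising the temperature shifts probability toward “dog” and “fox,” so the teacher’s view of how the classes relate carries more weight in training.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw logits into probabilities; a higher temperature flattens the result."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical teacher logits for ["cat", "dog", "fox"]; log-probabilities give 80/15/5 at T = 1.
classes = ["cat", "dog", "fox"]
teacher_logits = [math.log(0.80), math.log(0.15), math.log(0.05)]

for T in (1.0, 4.0):
    probs = softmax_with_temperature(teacher_logits, T)
    print(f"T={T}: " + ", ".join(f"{c}={p:.2f}" for c, p in zip(classes, probs)))
```

With these logits, T = 4 gives roughly 46% / 30% / 23%: “cat” is still the top class, but the secondary classes now carry noticeably more signal.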

(3) Train the Student Model

- The smaller model learns from both the teacher’s soft targets and the original labeled data (the “hard” targets).

- By mimicking the teacher’s outputs, the student absorbs the distilled knowledge without requiring as much capacity.
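One common way to combine the two signals is a weighted sum of ordinary cross-entropy on the true label and a KL-divergence term that pulls the student’s softened distribution toward the teacher’s. The sketch below is plain Python for a single example; the weight `alpha`, the temperature value, and the helper names are illustrative assumptions, and a deep-learning framework would compute the same quantity over batches of tensors.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, true_label, alpha=0.5, temperature=4.0):
    # Hard-target term: standard cross-entropy against the ground-truth label (T = 1).
    student_probs = softmax(student_logits)
    hard_loss = -math.log(student_probs[true_label])

    # Soft-target term: match the teacher's softened distribution at the same temperature.
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    soft_loss = kl_divergence(teacher_soft, student_soft)

    # Scale the soft term by T^2 so its gradient magnitude stays comparable as T changes.
    return alpha * hard_loss + (1 - alpha) * (temperature ** 2) * soft_loss

# Hypothetical logits for one image whose true class is index 0 ("cat").
teacher = [3.0, 1.5, 0.5]
student = [2.0, 1.0, 0.2]
print(distillation_loss(student, teacher, true_label=0))
```

If the student already matches the teacher exactly, the soft term vanishes and only the weighted cross-entropy remains.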

(4) Evaluate and Optimize

The student model’s performance is validated and fine-tuned to ensure it meets the desired accuracy and efficiency.
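Validation typically compares the student against both the ground truth and the teacher. Here is a minimal sketch, assuming you already have class predictions on a held-out set and know each model’s parameter count; the function name, the toy predictions, and the parameter counts are all made up for illustration.

```python
def evaluate_student(student_preds, teacher_preds, labels, student_params, teacher_params):
    """Summarize accuracy, teacher agreement, and size reduction for a distilled student."""
    n = len(labels)
    student_acc = sum(s == y for s, y in zip(student_preds, labels)) / n
    teacher_acc = sum(t == y for t, y in zip(teacher_preds, labels)) / n
    # Fraction of inputs where the student makes the same prediction as the teacher.
    agreement = sum(s == t for s, t in zip(student_preds, teacher_preds)) / n
    return {
        "student_accuracy": student_acc,
        "teacher_accuracy": teacher_acc,
        "accuracy_gap": teacher_acc - student_acc,
        "teacher_agreement": agreement,
        "compression_ratio": teacher_params / student_params,
    }

# Made-up predictions on 8 held-out examples and made-up parameter counts.
labels = [0, 1, 2, 1, 0, 2, 1, 0]
teacher_preds = [0, 1, 2, 1, 0, 2, 0, 0]
student_preds = [0, 1, 2, 0, 0, 2, 0, 0]
report = evaluate_student(student_preds, teacher_preds, labels,
                          student_params=25_000_000, teacher_params=100_000_000)
for name, value in report.items():
    print(f"{name}: {value:.3f}")
```

Whether an accuracy gap is acceptable depends on the size and latency savings it buys; if it is too large, the temperature, loss weighting, or student size are the usual knobs to revisit.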

4. A Simple Example: The Classroom Analogy

- Without Distillation: A small model learns directly from raw data, like a student relying solely on a textbook without guidance.

- With Distillation: The teacher (large model) explains not only the answers but also why certain conclusions are drawn. The student absorbs these nuanced insights, leading to better understanding.

The figure below, from “Knowledge Distillation: Simplified,” may also help:

5. Real-World Applications of Knowledge Distillation

(1) Lightweight AI on Edge Devices

- Small, distilled models are deployed on smartphones, IoT devices, and embedded systems.

- Example: A distilled CLIP model for image classification on mobile.

(2) Real-Time Applications

- Faster inference is crucial for speech recognition or recommendation systems in real-time scenarios.

- Example: Voice assistants using distilled models for quick responses.

(3) Multitask Learning

- Combine multiple teacher models into one small model capable of handling various tasks.

- Example: A single model for both translation and sentiment analysis.
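One simple way to distill several task-specific teachers into one student is to give the student a separate output head per task and sum the per-task distillation losses. The sketch below assumes that setup; the task names, weights, and logits are hypothetical, and it uses two classification tasks for brevity (a translation head could apply the same loss to each output token’s vocabulary distribution).

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def multitask_distillation_loss(student_outputs, teacher_outputs, task_weights, temperature=2.0):
    """Weighted sum of per-task distillation losses.

    student_outputs: task name -> logits from the student's head for that task.
    teacher_outputs: task name -> logits from that task's dedicated teacher.
    task_weights:    task name -> how much that task counts in the total loss.
    """
    total = 0.0
    for task, teacher_logits in teacher_outputs.items():
        teacher_soft = softmax(teacher_logits, temperature)
        student_soft = softmax(student_outputs[task], temperature)
        total += task_weights[task] * temperature ** 2 * kl_divergence(teacher_soft, student_soft)
    return total

# Hypothetical logits: a 2-class sentiment teacher and a 3-class topic teacher.
teachers = {"sentiment": [2.0, -1.0], "topic": [0.5, 1.5, -0.5]}
student = {"sentiment": [1.0, 0.0], "topic": [0.0, 1.0, 0.0]}
weights = {"sentiment": 1.0, "topic": 0.5}
print(multitask_distillation_loss(student, teachers, weights))
```

The per-task weights are a design choice: they let you keep a harder or more important task from being drowned out by an easier one.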

6. Challenges in Knowledge Distillation

(1) Knowledge Loss

Small models may fail to replicate the full depth of understanding from large models, especially for complex tasks.

(2) Computational Overhead

Generating soft targets from a teacher model can be resource-intensive when working with large datasets.
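One common way to contain this cost is offline distillation: run the teacher once per training example, store its soft targets, and reuse them every epoch instead of calling the teacher inside the training loop. The sketch below assumes a hypothetical `teacher_fn` that maps an input to logits; `fake_teacher` and the tiny dataset merely stand in for a real model and real data.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

class SoftTargetCache:
    """Compute each example's soft targets once, then serve them from memory."""

    def __init__(self, teacher_fn, temperature=4.0):
        self.teacher_fn = teacher_fn  # hypothetical: maps an input to a list of logits
        self.temperature = temperature
        self.cache = {}
        self.teacher_calls = 0  # counts expensive teacher forward passes

    def get(self, example_id, example):
        if example_id not in self.cache:
            self.teacher_calls += 1
            logits = self.teacher_fn(example)
            self.cache[example_id] = softmax(logits, self.temperature)
        return self.cache[example_id]

def fake_teacher(x):
    # Stand-in for a large model: returns made-up logits for three classes.
    return [x, 2.0 * x, -x]

dataset = {0: 0.5, 1: 1.0, 2: -0.3}
cache = SoftTargetCache(fake_teacher)
for epoch in range(3):
    for example_id, x in dataset.items():
        soft_targets = cache.get(example_id, x)
        # A student update using soft_targets would go here.
print(cache.teacher_calls)
```

Over three epochs the teacher runs three times instead of nine. The trade-off is storage that grows with dataset size times number of classes, and cached targets stop matching the inputs if the data is randomly augmented each epoch.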

(3) Task-Specific Needs

Different tasks require different knowledge. Adapting distilled models to specific tasks remains a research challenge.

7. One-Line Summary

Knowledge distillation compresses the “wisdom” of large models into smaller, efficient ones, enabling faster, cost-effective AI with only a modest loss in accuracy.

Final Thoughts

Knowledge distillation bridges the gap between large, powerful models and real-world deployment. By making AI both smarter and leaner, this technique is transforming applications from edge devices to real-time systems. Next time you use a quick AI assistant on your phone, think about the distilled knowledge powering it. Stay tuned for more insights tomorrow!