Hello, and welcome to the first edition of Daily AI Insight, a series dedicated to unraveling the fascinating world of artificial intelligence, one bite-sized topic at a time.

Daily AI Insight aims to bridge the gap between fast-moving AI research and the people who want to understand it. Every post will break down a key concept, a research trend, or a real-world application of AI into digestible, easy-to-understand insights. Whether you're an AI enthusiast, a professional looking to integrate AI into your work, or just curious about what all the fuss is about, this series is for you.

1. What is the Transformer?

The Transformer is a deep learning architecture introduced by researchers at Google in 2017 in the seminal paper “Attention Is All You Need.” Originally designed for natural language processing (NLP), where it was first demonstrated on machine translation, it has since become the foundation for state-of-the-art AI models in many domains, including computer vision, speech processing, and multimodal learning.

Traditional NLP models like RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks) had two significant shortcomings:

- Sequential Processing: These models processed text one token at a time, with each step depending on the result of the previous one, which slowed down computation and made it hard to parallelize.

- Difficulty Capturing Long-Range Dependencies: For long sentences or documents, these models often lost crucial contextual information from earlier parts of the input.
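To see why recurrence blocks parallelism, here is a minimal sketch of an RNN step loop. It assumes a toy RNN with a single scalar hidden state and made-up weights (`w_x`, `w_h`, `b` are illustrative); real RNNs and LSTMs use vectors, weight matrices and, for LSTMs, gates. The point is only the dependency: step *t* cannot start until step *t − 1* has finished, and information from the first token must survive every intermediate update to influence the last one.

```python
import math

def rnn_forward(inputs, w_x, w_h, b):
    """Toy scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    Each step needs the hidden state from the previous step, so the loop
    cannot be parallelized across time steps.
    """
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)  # depends on the previous h
        states.append(h)
    return states

# Hypothetical inputs and weights, chosen only for illustration.
states = rnn_forward([1.0, 0.5, -0.3, 0.8], w_x=0.7, w_h=0.9, b=0.0)
print(len(states))  # 4 hidden states, computed one after another
```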

The Transformer dropped recurrence entirely and relied on a Self-Attention Mechanism instead, enabling it to process entire input sequences simultaneously and focus dynamically on the most relevant parts of the sequence. Think of it like giving the model a panoramic lens: it views the entire context at once rather than one word at a time.

2. Why is the Transformer Important?

The Transformer brought a paradigm shift to AI, fundamentally altering how models process, understand, and generate information. Here's why it’s considered revolutionary:

(1) Parallel Processing

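Unlike RNNs, which process data step by step, Transformers can analyze all parts of the input sequence simultaneously. This parallelism makes much better use of GPUs and TPUs and significantly speeds up training, making it feasible to train models on massive datasets. At inference time the input can also be processed in parallel, although generating text still produces one token at a time.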

(2) Better Understanding of Context

The Self-Attention Mechanism lets the Transformer relate every token in a sequence to every other token. For example, in the sentence “Although it was raining, she decided to go for a run,” the words “although” and “decided” are closely related, even though several words separate them. Transformers excel at identifying and using such relationships.

(3) Scalability

The Transformer is built from identical layers stacked on top of one another, so it scales in a straightforward way: add more layers, make each layer wider, and train on more data. This is a large part of why OpenAI's GPT series, Google’s BERT, and most other LLMs (Large Language Models) are built on this architecture.
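A rough parameter count shows how stacking identical layers makes scaling predictable. This is a back-of-the-envelope sketch under stated assumptions: one layer means a self-attention block (query, key, value and output projections, each `d_model × d_model`) plus a feed-forward block whose inner width is 4 × `d_model`, as in the original paper; biases, layer norms, embeddings and the extra cross-attention in encoder-decoder decoders are ignored. The function names are illustrative.

```python
def approx_layer_params(d_model: int, ff_mult: int = 4) -> int:
    """Rough weight count for one Transformer layer (biases and layer norms ignored).

    Self-attention: Q, K, V and output projections, each d_model x d_model.
    Feed-forward: d_model -> ff_mult * d_model -> d_model.
    """
    attention = 4 * d_model * d_model
    feed_forward = 2 * d_model * (ff_mult * d_model)
    return attention + feed_forward

def approx_stack_params(n_layers: int, d_model: int) -> int:
    """Identical layers are stacked, so the count scales linearly with depth."""
    return n_layers * approx_layer_params(d_model)

for n_layers, d_model in [(6, 512), (12, 768), (24, 1024)]:
    print(n_layers, d_model, f"{approx_stack_params(n_layers, d_model):,}")
```

Under these assumptions each layer holds about 12 × `d_model`² weights, so doubling the number of layers doubles the count, while doubling the width roughly quadruples it.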

3. How Does the Transformer Work?

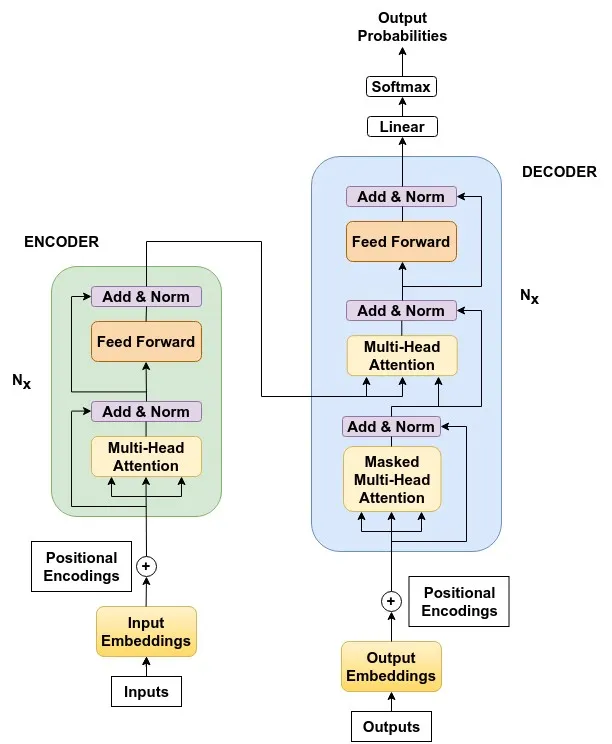

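The original Transformer is built around two core components: an encoder and a decoder, each a stack of identical layers. (Many later models keep only one half: BERT is encoder-only, while GPT models are decoder-only.) Here’s how they function: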

(1) The Encoder

The encoder processes the input sequence, such as a sentence, and transforms it into a series of rich, context-aware vector representations. For instance, in a translation task, the encoder might take the sentence “I love programming,” convert each token (a word or a piece of a word) into a numerical embedding, and then refine those embeddings layer by layer so that each one reflects both the token’s meaning and its relationships to the other tokens.
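Here is a minimal sketch of how the encoder’s input vectors could be built, assuming a toy three-word vocabulary and random embedding vectors (a real model learns its embedding table, and its tokenizer usually splits text into sub-word pieces). In the original paper a sinusoidal positional encoding is added to each token embedding, because self-attention on its own does not know the order of the tokens. The names `EMBEDDINGS`, `positional_encoding` and `encoder_inputs` are illustrative, not from any library.

```python
import math
import random

def positional_encoding(pos: int, d_model: int) -> list[float]:
    """Sinusoidal encoding from the original Transformer paper: even dimensions
    use sin, odd dimensions use cos, with wavelengths that grow geometrically
    with the dimension index."""
    pe = []
    for i in range(d_model):
        angle = pos / (10000 ** ((i - i % 2) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

D_MODEL = 8
VOCAB = ["I", "love", "programming"]  # hypothetical toy vocabulary
rng = random.Random(0)
# A real model learns this table; random vectors stand in for illustration.
EMBEDDINGS = {tok: [rng.gauss(0.0, 1.0) for _ in range(D_MODEL)] for tok in VOCAB}

def encoder_inputs(tokens: list[str]) -> list[list[float]]:
    """Embedding lookup plus positional encoding: the vectors fed to the first encoder layer."""
    return [
        [e + p for e, p in zip(EMBEDDINGS[tok], positional_encoding(pos, D_MODEL))]
        for pos, tok in enumerate(tokens)
    ]

x = encoder_inputs(["I", "love", "programming"])
print(len(x), len(x[0]))  # one D_MODEL-dimensional vector per token
```

The encoder layers then mix these vectors with self-attention and feed-forward blocks, which is where the context-awareness comes from.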

(2) The Decoder

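The decoder generates the target sequence one token at a time. At each step it attends to the encoder’s output (through cross-attention) and to the tokens it has already produced. For translation, it might turn the encoded representation of “I love programming” into “J’aime programmer” in French.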
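The decoder produces its output one token at a time, feeding each new token back in as input. During training it sees the whole target sentence at once, so a causal mask stops each position from attending to later positions; during generation it runs a loop. The sketch below shows both ideas under loud assumptions: `toy_next_token` and `TOY_TABLE` are hypothetical stand-ins for a trained decoder (a real one would compute a probability distribution using the encoder output), and the tokens are simplified to whole words.

```python
def causal_mask(n: int) -> list[list[bool]]:
    """mask[i][j] is True when position i may attend to position j (only j <= i).
    Used during training, when the full target sequence is processed at once."""
    return [[j <= i for j in range(n)] for i in range(n)]

def greedy_decode(next_token, max_len: int = 10) -> list[str]:
    """Generate one token at a time, feeding each output back in as input."""
    output = ["<s>"]  # start-of-sequence marker
    while len(output) < max_len:
        token = next_token(output)  # sees only the tokens generated so far
        output.append(token)
        if token == "</s>":  # end-of-sequence marker
            break
    return output

# Hypothetical stand-in for a trained decoder that has already read the
# encoder's representation of "I love programming".
TOY_TABLE = {"<s>": "J'aime", "J'aime": "programmer", "programmer": "</s>"}

def toy_next_token(prefix: list[str]) -> str:
    return TOY_TABLE[prefix[-1]]

print(greedy_decode(toy_next_token))
```

Always picking the single most likely next token (greedy decoding) is the simplest strategy; real systems often use beam search or sampling instead.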

Self-Attention Mechanism in Action

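The heart of the Transformer lies in self-attention, which lets the model compute how much each word should draw on every other word in the sequence. Each token is projected into a query, a key, and a value vector; the dot product of one token’s query with another token’s key, scaled and passed through a softmax, gives an attention weight, and each token’s output is the weighted sum of all the value vectors. For instance, when processing “I love programming,” a trained model may learn to give “love” a strong weight on “programming,” since that word tells it what is being loved.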
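The original paper defines scaled dot-product attention as `softmax(Q·Kᵀ / sqrt(d_k))·V`. Below is a minimal pure-Python sketch of that formula, assuming hand-picked 2-dimensional vectors for the three tokens and using the same vectors as queries, keys and values; a real model derives Q, K and V from learned projections, uses hundreds of dimensions, and runs several attention heads in parallel.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Q, K: lists of d_k-dim vectors; V: list of d_v-dim vectors (one row per token).

    Returns (outputs, weights): weights[i][j] is how much token i attends to token j.
    """
    d_k = len(K[0])
    weights = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights.append(softmax(scores))
    # Each output row is a weighted average of the value vectors.
    outputs = [
        [sum(w * v[c] for w, v in zip(row, V)) for c in range(len(V[0]))]
        for row in weights
    ]
    return outputs, weights

tokens = ["I", "love", "programming"]
X = [[1.0, 0.0], [0.0, 1.0], [0.3, 1.2]]  # hand-picked toy vectors, not learned
outputs, weights = scaled_dot_product_attention(X, X, X)
for tok, row in zip(tokens, weights):
    print(tok, [round(w, 2) for w in row])
```

With these particular vectors, the row for “love” puts its largest weight on “programming”. Every row of weights is computed independently, which is exactly what lets the Transformer process all positions in parallel.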

4. Key Applications and Models

The success of the Transformer architecture has led to the development of many groundbreaking AI models across different domains:

(1) Natural Language Processing

- BERT (Bidirectional Encoder Representations from Transformers): An encoder-only Google model designed to understand the meaning of text in context, widely used in search engines, question answering, and sentiment analysis.

- GPT Series (Generative Pre-trained Transformers): OpenAI’s series of decoder-only models, from the original GPT (2018) through GPT-4, which excel at text generation, from creative writing to code completion.

(2) Computer Vision

- Vision Transformer (ViT): Adapts the Transformer architecture for image recognition by splitting an image into fixed-size patches, treating each patch like a token, and applying self-attention to understand relationships between different parts of the image.
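The patching step is easy to show. This sketch assumes a single-channel image stored as a list of rows whose height and width are divisible by the patch size; `image_to_patches` is an illustrative name. In ViT each flattened patch would then be linearly projected to an embedding and combined with a position embedding before entering the Transformer (the original ViT paper used 16×16 patches; 2×2 keeps the example readable).

```python
def image_to_patches(image, patch_size):
    """Split an H x W image (list of rows) into non-overlapping
    patch_size x patch_size patches, flattening each patch into one vector
    (one "token" per patch), scanning left to right, top to bottom."""
    h, w = len(image), len(image[0])
    if h % patch_size or w % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# 4x4 single-channel "image" with pixel values 0..15
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, patch_size=2)
print(len(patches))  # 4
print(patches[0])    # [0, 1, 4, 5]
```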

(3) Multimodal AI

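Models like CLIP and DALL-E use Transformers to work across text and images. CLIP learns a shared embedding space for images and text, so it can match an image with the description that fits it best (useful for zero-shot image classification and search), while DALL-E generates images from text descriptions.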
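CLIP-style matching boils down to comparing vectors. The sketch below assumes we already have an image embedding and several caption embeddings (the numbers are made up; in CLIP they would come from its image and text encoders) and picks the caption with the highest cosine similarity, which is how zero-shot classification with text prompts works. `best_caption` is an illustrative name, not part of any CLIP library.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

def best_caption(image_embedding, caption_embeddings):
    """Return the caption whose embedding is most similar to the image embedding."""
    return max(caption_embeddings,
               key=lambda cap: cosine_similarity(image_embedding, caption_embeddings[cap]))

# Hypothetical embeddings standing in for the outputs of CLIP's two encoders.
image_embedding = [0.9, 0.1, 0.2]
captions = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a cat": [0.1, 0.9, 0.3],
    "a diagram of a Transformer": [0.0, 0.2, 0.9],
}
print(best_caption(image_embedding, captions))  # a photo of a dog
```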

5. Advantages of the Transformer

- Efficiency: Parallel processing dramatically reduces training time compared with RNNs.

- Versatility: Adaptable to various tasks beyond NLP, such as computer vision and multimodal applications.

- Scalability: Easy to scale up for training large models on massive datasets.

6. Challenges and Limitations

Despite its advantages, the Transformer is not without drawbacks:

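- High Computational Costs: Training Transformers, especially large-scale ones like GPT-4, requires enormous computational resources and specialized hardware like GPUs or TPUs. In addition, the cost of standard self-attention grows quadratically with sequence length, which makes very long inputs expensive.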

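- Data-Hungry: Transformers typically need vast amounts of training data, mostly unlabeled text or images used for self-supervised pre-training. Pre-training a large model from scratch is out of reach for many smaller organizations and for domains with limited data, although fine-tuning an existing pre-trained model needs far less.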

- Lack of Interpretability: While the self-attention mechanism provides flexibility, the inner workings of Transformers remain a “black box,” posing challenges for applications like healthcare and legal systems where decisions need to be transparent.
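One concrete source of the computational cost is that standard self-attention compares every token with every other token, so each head in each layer computes an n × n matrix of scores for a sequence of n tokens. This small sketch just counts those scores; `attention_score_entries` is an illustrative helper, and it ignores the embedding dimension and every other part of the model.

```python
def attention_score_entries(seq_len: int, n_heads: int = 1) -> int:
    """Number of query-key scores self-attention computes in one layer:
    every token is compared with every token, once per head."""
    return n_heads * seq_len * seq_len

for n in (512, 1024, 2048, 4096):
    print(n, f"{attention_score_entries(n):,}")
```

Each doubling of the sequence length quadruples the number of scores, which is why long documents are expensive for standard Transformers.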

7. Transformative Impact

The Transformer has reshaped AI research and applications, enabling breakthroughs in natural language understanding, image recognition, and generative AI. It’s the foundation for innovations like ChatGPT, automated translation, and content creation tools.

8. One-Line Summary

The Transformer revolutionized AI with its self-attention mechanism and scalability, making it the cornerstone of modern AI and driving advancements across multiple domains.