Today I started with Choosing the learning rate, reviewed the Jupyter lab, and learnt what feature engineering is.

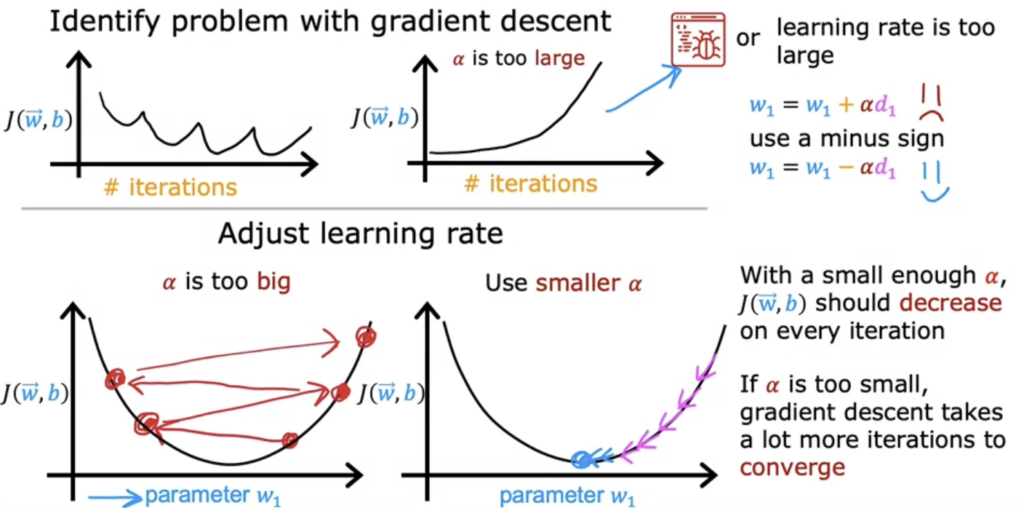

Choosing the learning rate

The graph taught in Choosing the learning rate is helpful when developing models.
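As I understand it, that graph plots the cost against the number of gradient descent iterations for different learning rates. Here is a minimal sketch of the same check in plain Python. The toy data, the function names (`run_gradient_descent`, `is_decreasing`), and the list of candidate learning rates are my own assumptions for illustration, not code from the course labs.

```python
# Toy data on the line y = 2x + 1 (made-up values, not from the course labs).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]


def cost(w, b):
    """Squared-error cost J(w, b) for the model w * x + b."""
    m = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def run_gradient_descent(alpha, iterations=50):
    """Run batch gradient descent from w = b = 0.

    Returns the cost before the first step and after every step.
    """
    w, b = 0.0, 0.0
    m = len(xs)
    history = [cost(w, b)]
    for _ in range(iterations):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        dj_dw = sum(e * x for e, x in zip(errors, xs)) / m
        dj_db = sum(errors) / m
        # Update both parameters using gradients computed from the old values.
        w -= alpha * dj_dw
        b -= alpha * dj_db
        history.append(cost(w, b))
    return history


def is_decreasing(history):
    """True if the cost never goes up from one iteration to the next."""
    return all(later <= earlier for earlier, later in zip(history, history[1:]))


# Try learning rates roughly 3x apart and see which ones keep the cost going down.
for alpha in (0.001, 0.003, 0.01, 0.03, 0.1, 0.3):
    history = run_gradient_descent(alpha)
    status = "decreasing" if is_decreasing(history) else "NOT decreasing (alpha too large?)"
    print(f"alpha={alpha:<6} final cost={history[-1]:.4g}  {status}")
```

On this toy data the smaller learning rates lower the cost at every step, while 0.3 is too large and the cost grows instead. A real model would need its own range of candidates, so treat the numbers here as placeholders.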

Feature Engineering

When I first started Andrew Ng’s Supervised Machine Learning course, I didn’t realize how much of an impact feature engineering could have on a model’s performance. But boy, was I in for a surprise! As I worked through the course, I quickly saw that the raw data we start with is rarely good enough for building a great model. Instead, it needs to be transformed, scaled, and cleaned up, and that’s where feature engineering comes into play.

Feature engineering is all about making your data more useful for a machine learning algorithm. Think of it like preparing ingredients for a recipe — the better the quality of your ingredients, the better the final dish will be. Similarly, in machine learning, the features (the input variables) need to be well-prepared to help the algorithm understand patterns more easily. Without this step, even the most powerful algorithms might not perform at their best.

In the course, Andrew Ng really breaks it down and explains how important feature scaling and transformation are. In one of the early lessons, he used the example of linear regression — a simple algorithm that relies on understanding the relationship between input features and the output. If the features are on vastly different scales, it can throw off the whole process and make training the model take much longer. This was something I had never considered before, but it made so much sense once he explained it.

I remember struggling a bit with the idea of scaling features at first. Variables in the same dataset can be on completely different scales, like the size of a house in square feet versus the number of bedrooms: the size might have values in the thousands, while the number of bedrooms might only range from 1 to 5. Without scaling, the larger feature would dominate the gradient descent updates, so the algorithm would need many more iterations to settle on good parameters. Learning how to scale these features properly made a huge difference in getting the algorithm to work more efficiently.
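To make the scaling idea concrete, here is a small sketch of z-score normalization, one common way to put features on a comparable scale. It uses only Python's standard library. The house values and the helper name `zscore_normalize` are assumptions I made up for illustration, and the helper assumes the column is not constant (otherwise the standard deviation would be zero).

```python
from statistics import mean, pstdev


def zscore_normalize(column):
    """Rescale a list of numbers to mean 0 and standard deviation 1.

    Assumes the values are not all identical (standard deviation > 0).
    """
    mu = mean(column)
    sigma = pstdev(column)  # population standard deviation
    return [(value - mu) / sigma for value in column]


# Made-up example houses: size in square feet and number of bedrooms.
sizes_sqft = [2104.0, 1416.0, 1534.0, 852.0]
bedrooms = [5.0, 3.0, 3.0, 2.0]

scaled_sizes = zscore_normalize(sizes_sqft)
scaled_bedrooms = zscore_normalize(bedrooms)

print("scaled sizes:   ", [round(v, 3) for v in scaled_sizes])
print("scaled bedrooms:", [round(v, 3) for v in scaled_bedrooms])
```

After this step both columns are centered on 0 with a standard deviation of 1, so neither one dominates just because of its units. The same `mu` and `sigma` from the training data would need to be reused when scaling new houses for prediction.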

One of the key takeaways from this part of the course was the importance of understanding your data. Andrew Ng emphasizes that feature engineering is not just about applying transformations, but also about using your knowledge of the problem to make the data more relevant. For example, I learned that I could create new features from existing ones. If I had data about the size of a house and the number of bedrooms, I could create a new feature like "bedrooms per square foot," which might give the model more useful information to work with.
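As a rough sketch of that idea, the snippet below derives a "bedrooms per square foot" feature from the two existing ones. The dictionary keys, the example values, and the helper name `add_bedrooms_per_sqft` are hypothetical and only there for illustration.

```python
def add_bedrooms_per_sqft(house):
    """Return a copy of the house record with a derived ratio feature added."""
    engineered = dict(house)  # copy so the original record is left untouched
    engineered["bedrooms_per_sqft"] = house["bedrooms"] / house["size_sqft"]
    return engineered


# Made-up example records.
houses = [
    {"size_sqft": 2104.0, "bedrooms": 5},
    {"size_sqft": 1416.0, "bedrooms": 3},
    {"size_sqft": 852.0, "bedrooms": 2},
]

engineered_houses = [add_bedrooms_per_sqft(h) for h in houses]

for h in engineered_houses:
    print(h)
```

Whether a derived feature like this actually helps depends on the data, so it is worth comparing the model's results with and without it.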

As the course progressed, I saw how important feature engineering is not just for scaling but also for improving model performance in more complex algorithms like logistic regression and neural networks. It was all about creating features that made it easier for the algorithm to find patterns and make predictions.

To be honest, at first, I didn’t realize how much of a difference feature engineering could make. But after practicing it through the course, I saw firsthand how tweaking and transforming features could lead to much better results. It felt like I had unlocked a new level in the course, where I wasn’t just feeding data into the algorithm, but actually working with it to make the algorithm smarter. Feature engineering really turned out to be one of the most rewarding parts of learning machine learning, and it’s something I’ll definitely keep honing as I continue on this journey.

It’s the end of this learning week?!

I didn't expect to reach the end of this learning week already!

I didn't spend much time on the labs. There are three labs in this learning week, and I am afraid I might not be ready for this week's practice lab.

I will come back to update this post with more details after my exercises.

P.S. Feel free to check out my other posts in Supervised Machine Learning Journey.