# Supervised Machine Learning - Day 1


The Beginning

As I’ve been improving my AI-powered product knowledge base chatbot, which is built on Django/LangChain/OpenAI/Chroma/Gradio and sits on the AI application/framework layer, I have also kept an eye on how to build a pipeline for assessing the accuracy of machine learning models, which is part of AI DevOps/infra.

But I realized that I had no idea how to measure a model’s accuracy. This made me upset.

Then I started looking for answers.

My first Google search on this was “how to measure llm accuracy”, which brought me to Evaluating Large Language Models (LLMs): A Standard Set of Metrics for Accurate Assessment. It’s informative and not lengthy, so I read it through. It opened up a new world for me.

There is a standard set of metrics for evaluating LLMs, including:

- Perplexity - A measure of how well a language model predicts a sample of text. It is calculated as the inverse probability of the test set, normalized by the number of words; lower perplexity means better prediction.

- Accuracy - A measure of how often a language model makes correct predictions. It is calculated with the following formula:

  accuracy = (number of correct predictions) / (total number of predictions)

- F1-score - A measure of a language model’s balance between precision and recall. It is calculated as the harmonic mean of precision and recall.

- ROUGE score - A measure of how well a language model generates text that is similar to reference texts. It is commonly used for text generation tasks such as summarization and paraphrasing. There are several variants of ROUGE, including ROUGE-N, ROUGE-L, and ROUGE-W.

- BLEU score - A measure of how closely generated text matches one or more reference texts, based on n-gram overlap (modified precision) with a brevity penalty for overly short outputs. It is commonly used for text generation tasks such as machine translation and image captioning.

- METEOR score - A measure of how well generated text matches reference texts. It combines unigram precision and recall (weighted toward recall) and also credits matches on word stems and synonyms.

- Question answering metrics - Used to evaluate the ability of a language model to provide correct answers to questions. Common metrics include accuracy, F1-score, and macro F1-score. They are calculated by comparing the generated answers to one or more reference answers and scoring the overlap between them.

  Let’s say we have a language model trained to answer questions about a given text. We test the model on a set of 100 questions and compare the generated answers to the actual answers. The model’s accuracy, F1-score, and macro F1-score are then calculated from the overlap between the generated answers and the actual answers.

- Sentiment analysis metrics - Used to evaluate the ability of a language model to classify sentiment correctly. Common metrics include accuracy, weighted accuracy, and macro F1-score.

- Named entity recognition metrics - Used to evaluate the ability of a language model to identify entities correctly. Common metrics include accuracy, precision, recall, and F1-score.

- Contextualized word embeddings - Used to evaluate the ability of a language model to capture context and meaning in word representations. The embeddings come from the language model itself, which is trained, for example, to predict the next word in a sentence given the previous words.

  Let’s say we have a language model that generates word embeddings for a given text. We test the model on a set of 100 texts and compare the generated embeddings to reference embeddings, using methods such as cosine similarity and Euclidean distance.
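To make the accuracy, F1-score, and perplexity definitions above concrete, here is a minimal plain-Python sketch. The helper names (`accuracy`, `precision_recall_f1`, `perplexity`), the toy labels, and the token probabilities are all made up for illustration; this is not the article’s implementation or any library’s API, and in practice you would likely use an evaluation library instead.

```python
import math

def accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall and F1 for one class treated as 'positive'."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def perplexity(token_probs):
    """Inverse probability of the sequence normalized by its length N:
    PP = P(w_1 ... w_N) ** (-1 / N), computed in log space for stability."""
    n = len(token_probs)
    log_prob = sum(math.log(p) for p in token_probs)
    return math.exp(-log_prob / n)

# Hypothetical toy labels (1 = positive class)
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
print(accuracy(y_true, y_pred))
print(precision_recall_f1(y_true, y_pred))
# Hypothetical model that gives every token probability 0.25
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

With these toy labels, 4 of 6 predictions are correct, and precision, recall, and F1 all come out to 0.75. A model that assigns probability 0.25 to every token has a perplexity of about 4: it is as uncertain as picking uniformly among four words.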

I didn’t know most of them, and I didn’t know where to start!

I had to tell myself, “Man, you don’t know machine learning…” So my next search was “machine learning course”, and Andrew Ng’s Supervised Machine Learning: Regression and Classification came up at the top of the Google search results! It’s very well known, and I had heard of it before!

Then I made a decision: I would take action now and finish the course thoroughly!

I immediately enrolled in the course. Now let’s start the journey!

Day 1 Started

Basics

1. What is ML?

Defined by Arthur Samuel back in the 1950s 😯

“Field of study that gives computers the ability to learn without being explicitly programmed.”

The definition above contains the key point, “without being explicitly programmed”, which answers a question from one of my colleagues: “What’s the difference between a programmed system that triggers alerts on events and an AI-powered system that also triggers alerts on events?” He couldn’t tell the difference. That day, I tried to explain but couldn’t find the right words. Now I’ve found them.

2. What are the major types of ML algorithms?

- Supervised Learning
- Unsupervised Learning
- Reinforcement Learning

3. Supervised Learning

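Supervised learning algorithms learn input-to-output (x to y) mappings from examples that come with the right answers. Examples: visual inspection, self-driving cars, online advertising, machine translation, speech recognition, spam filtering.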

3.1. Regression (example: house price prediction)

By regression, we mean predicting a number from infinitely many possible outputs.

3.2. Classification (example: breast cancer detection)

Possible outputs: malignant or benign.

By classification, we mean predicting categories. Categories don’t have to be numbers; they can be non-numeric.

It can predict whether a picture is of a cat or a dog, and it can predict whether a tumor is benign or malignant. Categories can also be numbers, like 0 and 1, or 0, 1, and 2. But what makes classification different from regression when you’re interpreting the numbers is that classification predicts from a small, finite set of possible output categories, such as 0, 1, and 2, but not all the possible numbers in between, like 0.5 or 1.7.

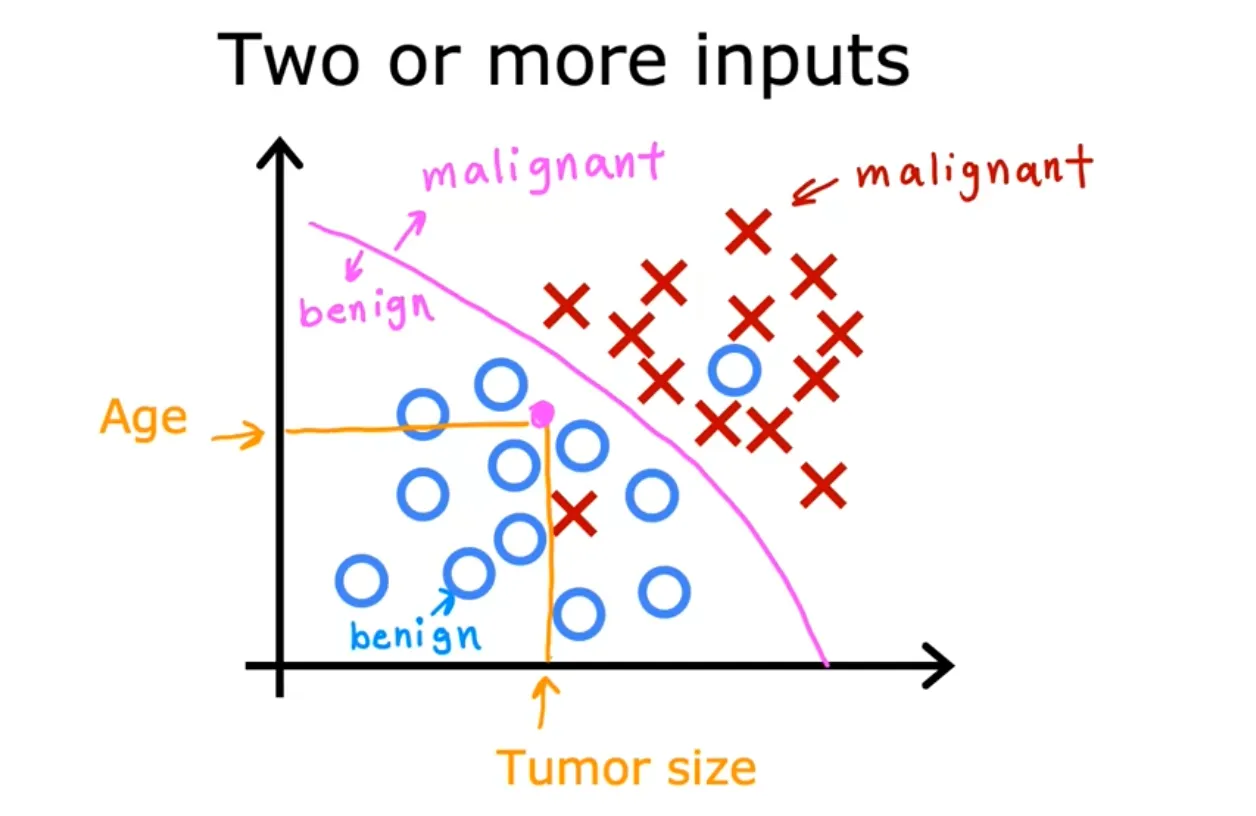

The first example for predicting cancer has only one input: the size of the tumor. Classification also works with more inputs, for example both the tumor size and the patient’s age.

The key is to find the boundary that separates benign from malignant tumors.
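Here is a tiny sketch of what “finding the boundary” means once you have two inputs, assuming the boundary is a straight line. The weights `W1`, `W2`, `B` and the function `classify` are hypothetical, hand-picked numbers for illustration only; a real model would learn the boundary from labeled data, and the course covers how to do that later.

```python
# Hypothetical, hand-picked boundary: W1 * tumor_size + W2 * age + B = 0.
# Illustrative numbers only; a real classifier would learn these from data.
W1, W2, B = 1.0, 0.05, -6.0

def classify(tumor_size_cm, age_years):
    """Return 'malignant' if the point lies on the positive side of the line,
    otherwise 'benign'."""
    score = W1 * tumor_size_cm + W2 * age_years + B
    return "malignant" if score >= 0 else "benign"

print(classify(2.0, 40))  # score is about -2, so this point falls on the benign side
print(classify(5.0, 60))  # score is about 2, so this point falls on the malignant side
```

Every point on one side of the line gets one category and every point on the other side gets the other, which is why the boundary is the key thing to find.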

4. Unsupervised Learning

Unsupervised learning finds something interesting in unlabeled data, using algorithms such as clustering.

4.1. Clustering Algorithm

Google News uses a clustering algorithm to group related news stories together.

In other words, a clustering algorithm takes data without labels and tries to automatically group similar data points into clusters by finding some structure or pattern in the data.
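To see how a clustering algorithm can group unlabeled data on its own, here is a minimal k-means sketch in plain Python. k-means is just one common clustering algorithm, chosen here as an example; nothing above says which algorithm Google News uses. The points are made up, and the naive “first k points” initialization is a simplification (real implementations typically use smarter initialization such as k-means++).

```python
import math

def kmeans(points, k, iterations=10):
    """Minimal k-means: alternately assign each point to its nearest centroid,
    then move each centroid to the mean of the points assigned to it."""
    centroids = [points[i] for i in range(k)]  # naive init: first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        for c, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centroids, clusters

# Hypothetical unlabeled 2-D points forming two visible groups
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (1.0, 1.5), (9.0, 8.0)]
centroids, clusters = kmeans(points, k=2)
print(centroids)
print(clusters)
```

No labels are passed in anywhere; the two groups emerge only from the distances between points.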

4.2. Anomaly Detection

Find unusual data.
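As a rough illustration of “finding unusual data”, here is a simple z-score rule: flag values that sit far from the mean, measured in standard deviations. This is a stand-in technique picked for brevity, not necessarily the method the course teaches; `find_anomalies`, the threshold of 2.0, and the response-time numbers are all hypothetical.

```python
import statistics

def find_anomalies(values, threshold=2.0):
    """Flag values more than `threshold` population standard deviations
    away from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:  # all values identical: nothing stands out
        return []
    return [v for v in values if abs(v - mean) / stdev > threshold]

# Hypothetical response times in milliseconds; one looks unusual
response_times_ms = [102, 98, 101, 99, 100, 97, 103, 100, 350]
print(find_anomalies(response_times_ms))
```

On very small samples a single extreme value also inflates the standard deviation, so this rule is only a starting point for building intuition.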

4.3. Dimensionality Reduction

Compress data using fewer numbers.
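One common way to “compress data using fewer numbers” is principal component analysis (PCA). The sketch below is my own illustration, not something specified above: it handles only 2-D points, projects each onto the direction of greatest variance, and so replaces two numbers per point with one. The function name `pca_2d_to_1d` and the sample points are hypothetical, and the 2×2 eigenvector is computed in closed form to avoid extra dependencies.

```python
import math

def pca_2d_to_1d(points):
    """Project 2-D points onto their first principal component,
    returning one number per point plus the unit direction used."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    centered = [(x - mx, y - my) for x, y in points]
    # Covariance matrix [[a, b], [b, c]]
    a = sum(x * x for x, _ in centered) / n
    b = sum(x * y for x, y in centered) / n
    c = sum(y * y for _, y in centered) / n
    # Largest eigenvalue of a symmetric 2x2 matrix, in closed form
    lam = (a + c) / 2 + math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    # Matching eigenvector: the direction of greatest variance
    if b != 0:
        vx, vy = b, lam - a
    else:
        vx, vy = (1.0, 0.0) if a >= c else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    vx, vy = vx / norm, vy / norm
    # Each point is compressed to its coordinate along (vx, vy)
    return [x * vx + y * vy for x, y in centered], (vx, vy)

# Hypothetical points lying on the line y = 2x
points = [(0.0, 0.0), (1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
projected, direction = pca_2d_to_1d(points)
print("direction:", direction)
print("1-D codes:", projected)
```

Because these points lie exactly on a line, one number per point loses no information; with real, noisy data some detail is discarded in exchange for the smaller representation.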

Regression Model

Using the house-selling example, we build a linear model to predict how much a house could sell for.

Any supervised learning model that predicts a number such as 220,000 or 1.5 or negative 33.2 is addressing what’s called a regression problem.

Linear regression is one example of a regression model.

Linear Regression

$$f_{w,b}(x) = wx + b$$

Or, in simpler format:

$$f(x) = wx + b$$

Now the question is: how do you find values for $w$ and $b$ so that the prediction $\hat{y}^{(i)}$ is close to the true target $y^{(i)}$ for many or maybe all training examples $(x^{(i)}, y^{(i)})$?

How to measure how well a line fits the training data?

To measure the error $\hat{y}^{(i)} - y^{(i)}$ for training example $i$, we compute the squared error term $\left(\hat{y}^{(i)} - y^{(i)}\right)^2$.

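Squared error cost function:

$$J(w,b) = \frac{1}{2m}\sum_{i=1}^{m}\left(\hat{y}^{(i)} - y^{(i)}\right)^2$$

where $m$ is the number of training examples.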

Well, I spent almost 20 minutes figuring out how to write math formulas on WordPress. Look! 🥳

The extra division by 2 is just meant to make some of our later calculations look neater, but the cost function still works whether you include this division by 2 or not.

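The final one, substituting $\hat{y}^{(i)} = f_{w,b}(x^{(i)}) = wx^{(i)} + b$:

$$J(w,b) = \frac{1}{2m}\sum_{i=1}^{m}\left(f_{w,b}(x^{(i)}) - y^{(i)}\right)^2$$

The goal is to find the $w$ and $b$ that minimize $J(w,b)$.

This comparison graph (when b = 0) is important!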
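The squared error cost function described above can be computed in a few lines. This is a minimal plain-Python sketch; the function names `predict` and `compute_cost` and the two-house training set are hypothetical examples, not code from the course.

```python
def predict(x, w, b):
    """Linear model f_wb(x) = w * x + b."""
    return w * x + b

def compute_cost(xs, ys, w, b):
    """Squared error cost J(w, b) = (1 / (2m)) * sum((f_wb(x_i) - y_i) ** 2)."""
    m = len(xs)
    total = 0.0
    for x_i, y_i in zip(xs, ys):
        error = predict(x_i, w, b) - y_i  # y_hat_i - y_i
        total += error ** 2
    return total / (2 * m)

# Hypothetical training set: house size (1000 sqft) -> price (1000s of dollars)
x_train = [1.0, 2.0]
y_train = [300.0, 500.0]
print(compute_cost(x_train, y_train, w=200.0, b=100.0))  # 0.0 (line passes through both points)
print(compute_cost(x_train, y_train, w=150.0, b=100.0))  # 3125.0
```

A perfect fit gives a cost of 0; any line that misses the targets gives a positive cost, and the worse the fit, the larger the number.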
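To connect the two views numerically: with b fixed at 0, each choice of w gives one line f_w(x) = wx and one value of J(w). The sketch below, using hypothetical toy data lying exactly on y = x and a hypothetical helper `cost_b0`, evaluates J(w) for several values of w.

```python
def cost_b0(xs, ys, w):
    """J(w) with b fixed at 0: (1 / (2m)) * sum((w * x_i - y_i) ** 2)."""
    m = len(xs)
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)

# Toy data that lies exactly on y = x, so the best w is 1
xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0]
for w in [-0.5, 0.0, 0.5, 1.0, 1.5, 2.0]:
    print(f"w = {w}: J(w) = {cost_b0(xs, ys, w):.3f}")
```

J(w) is 0 at w = 1 and grows symmetrically on both sides (for example, J(0.5) and J(1.5) are both 3.5/6, about 0.583), which traces out the parabola.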

The Challenging Part of Today’s Learning Journey

“Visualizing the cost function” was the most challenging part…

I watched it at least twice before I got the idea.
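One way to build intuition for the 3-D bowl (the “hammock”) and its contour plot is to evaluate J(w, b) over a grid of (w, b) pairs. This sketch only computes the heights, leaving plotting out to stay dependency-free; the toy data on y = x and the grid ranges are assumptions for illustration. Grid search is used here purely for intuition, not as a training method: the course trains linear regression with gradient descent.

```python
def compute_cost(xs, ys, w, b):
    """Squared error cost J(w, b) for the linear model w * x + b."""
    m = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)

xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0]  # toy data lying on the line y = x

# Evaluate J on a coarse grid. Plotting these heights in 3-D gives the bowl
# surface; grid points with (roughly) equal J lie on the same contour line.
ws = [i * 0.5 for i in range(-2, 7)]  # w from -1.0 to 3.0
bs = [i * 0.5 for i in range(-4, 5)]  # b from -2.0 to 2.0
grid = {(w, b): compute_cost(xs, ys, w, b) for w in ws for b in bs}

best = min(grid, key=grid.get)
print("lowest cost on the grid at (w, b) =", best, "with J =", grid[best])
```

The lowest point of the bowl sits at w = 1, b = 0, the line that passes through every toy data point.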

Comparing Regression and Classification Models

| Items | Regression | Classification | Comments |
|---|---|---|---|
| Output | Infinitely many possible numbers | Small, finite set of categories | |

Terminology

| Term | Comments |
|---|---|
| Discrete Category | |
| Training Set | The data set used to train the model |
| input variable or feature or input feature | Denoted as $x$ |
| target variable | Denoted as $y$ |
| $(x, y)$ | A single training example |
| $(x^{(i)}, y^{(i)})$ | The $i$-th training example |
| hypothesis ($f$) | $f$ means function; historically, it’s called the hypothesis. |
| $\hat{y}$ (y-hat) | In machine learning, the convention is that y-hat is the estimate or prediction for y. On a Mac, press Option + i, then the letter y, to get ŷ. |
| $y$ | When the symbol is just the letter y, it refers to the target, the actual true value in the training set. |
| $\hat{y} - y$ | This difference is called the “error”. It measures how far off the prediction is from the target. |
| parabola curve | You know, a quadratic function |
| Univariate | “Uni” means one in Latin. Univariate is just a fancy way of saying one variable. |
| parameters/coefficients/weights | In machine learning, the parameters of the model are the variables you can adjust during training in order to improve the model. |
| downward-sloping line | |
| hammock | Have some fun… |
| contour plot | |
| topographical | Learning some geography while learning ML… |
| gradient descent | This algorithm is one of the most important algorithms in machine learning. Gradient descent and its variations are used to train not just linear regression but some of the biggest and most complex models in all of AI. |