# Supervised Machine Learning – Day 6


Today I spent ~~10~~ ~~30~~ 60 mins reviewing my previous notes, and just realized that’s a lot.

I am amazing 🤩

Today I started with “Gradient descent for multiple linear regression”.

## Gradient descent for multiple linear regression

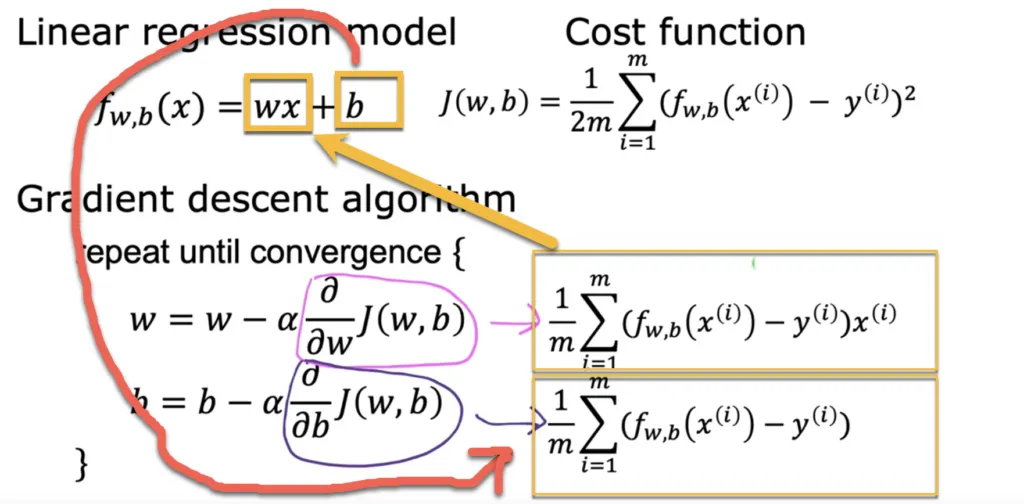

Holy… At the beginning, Andrew threw out the formula below and said he hoped we still remembered it. I don’t:

Why couldn’t I recognize it… Where does this come from?
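For readers who can’t see the screenshot, here is the update rule as I understand it from the course, transcribed into LaTeX. It assumes the squared-error cost with m training examples and n features, and all w_j and b are updated simultaneously:

```latex
\begin{aligned}
w_j &= w_j - \alpha \, \frac{1}{m} \sum_{i=1}^{m} \left( f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)} \right) x_j^{(i)}, \qquad j = 1, \dots, n \\
b   &= b   - \alpha \, \frac{1}{m} \sum_{i=1}^{m} \left( f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)} \right), \qquad f_{\vec{w},b}(\vec{x}) = \vec{w} \cdot \vec{x} + b
\end{aligned}
```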

I spent 30 mins reviewing several previous videos, then found it… The important videos are:

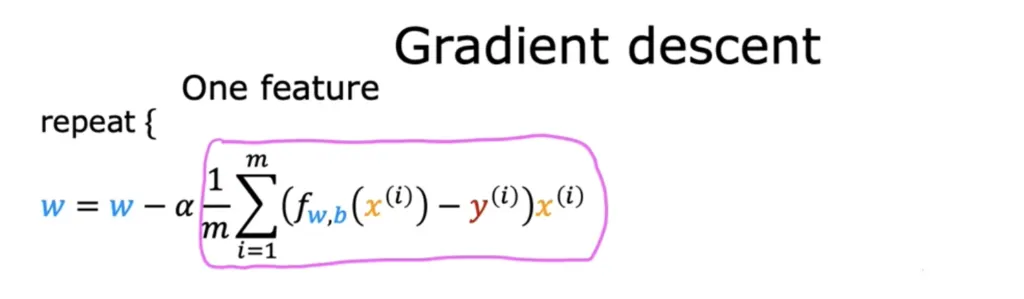

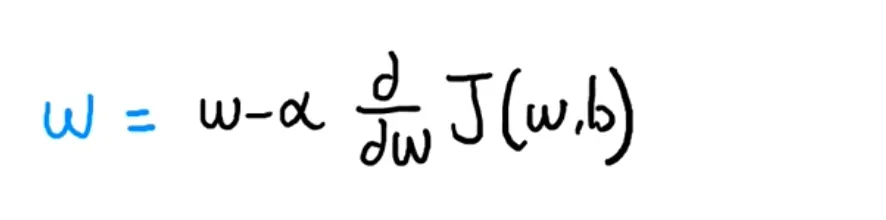

1. Week 1, “Implementing gradient descent”: Andrew just wrote down the formula below without explaining it (he explained it later).

2. “Gradient descent for linear regression”
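The update rule from these videos can be sketched in NumPy as below. `compute_gradient` and `gradient_descent` are hypothetical helper names (not the course’s lab code), and the toy data is made up. The point is that the x_j^(i) factor in ∂J/∂w_j becomes `X.T @ err` once the sum over examples is vectorized:

```python
import numpy as np


def compute_gradient(X, y, w, b):
    """Return (dJ/dw, dJ/db) for the squared-error cost J(w, b).

    X: (m, n) feature matrix, y: (m,) targets, w: (n,) weights, b: scalar bias.
    """
    m = X.shape[0]
    err = X @ w + b - y          # f_wb(x^(i)) - y^(i) for every example i
    dj_dw = (X.T @ err) / m      # (1/m) * sum_i err_i * x_j^(i), for each feature j
    dj_db = err.sum() / m        # (1/m) * sum_i err_i
    return dj_dw, dj_db


def gradient_descent(X, y, w, b, alpha, num_iters):
    """Run num_iters steps of gradient descent on all w_j and b."""
    for _ in range(num_iters):
        # Both gradients come from the old (w, b) before either is updated: simultaneous update
        dj_dw, dj_db = compute_gradient(X, y, w, b)
        w = w - alpha * dj_dw
        b = b - alpha * dj_db
    return w, b


# Hypothetical toy data generated from y = 2*x1 + 3*x2 + 1
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0]])
y = X @ np.array([2.0, 3.0]) + 1.0

w, b = gradient_descent(X, y, np.zeros(2), 0.0, alpha=0.05, num_iters=10000)
print(w, b)
```

Because the toy targets lie exactly on that plane and the learning rate is small enough to converge, w should end up very close to [2, 3] and b very close to 1.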

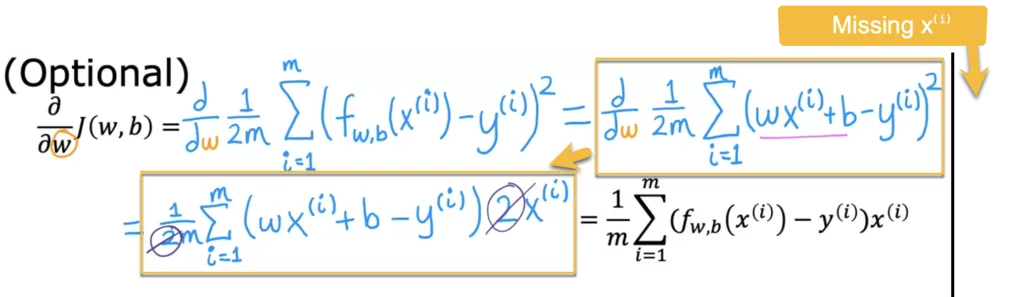

Holy! I found a mistake in Andrew’s course!

In the screenshot above, Andrew left out x^(i) at the end of the first line! WOW! I ROCK! That took almost 60 mins!

I am done for today!

## The situation reversed an hour later

But I felt upset. I was NOT convinced that I had found such a simple mistake, especially in Andrew’s most popular Machine Learning course!

I started trying to derive the formula myself.

And… I found out I was indeed too young, too naive… Andrew was right…

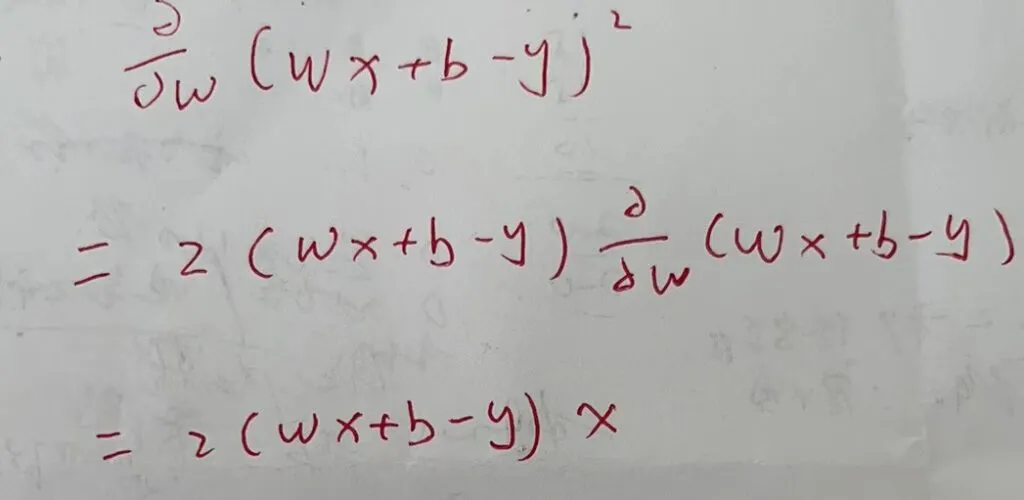

I got help from my college classmate, who has been working with calculus every day for more than 20 years…

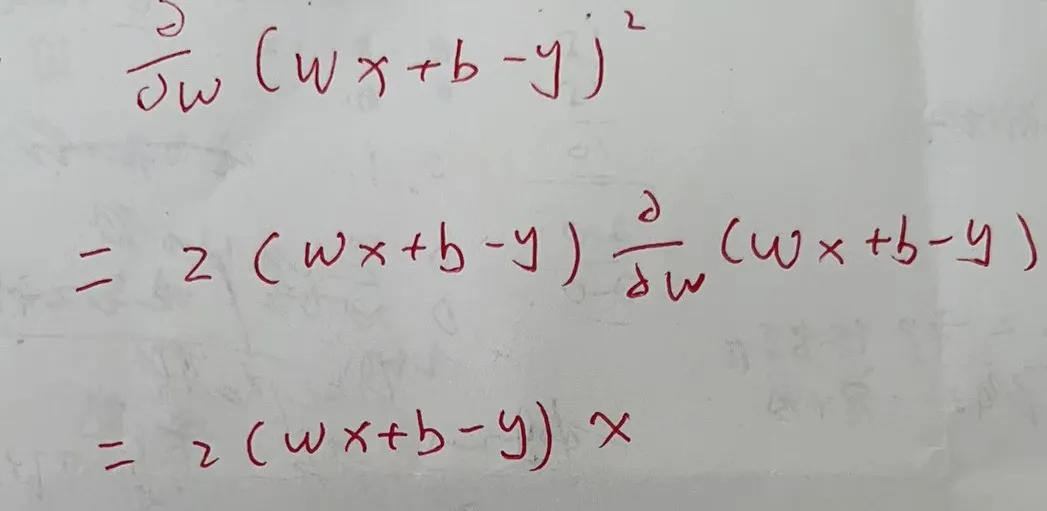

This is the derivation of the formula, written out by him:

He said this to me just like my college math teacher used to:

Chain rule, deriving step by step.
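Here is a step-by-step reconstruction in LaTeX of the single-feature derivation, following the course’s notation with f_{w,b}(x^(i)) = w x^(i) + b. It is a sketch, not a transcription of my classmate’s notes. The first line is only the definition of J, so there is no x^(i) to lose yet. The factor appears when the chain rule multiplies by the derivative of the inner term with respect to w, and the power rule supplies the 2 that cancels the 1/2:

```latex
\begin{aligned}
\frac{\partial}{\partial w} J(w,b)
  &= \frac{\partial}{\partial w} \frac{1}{2m} \sum_{i=1}^{m} \left( f_{w,b}(x^{(i)}) - y^{(i)} \right)^2 \\
  &= \frac{\partial}{\partial w} \frac{1}{2m} \sum_{i=1}^{m} \left( w x^{(i)} + b - y^{(i)} \right)^2 \\
  &= \frac{1}{2m} \sum_{i=1}^{m} 2 \left( w x^{(i)} + b - y^{(i)} \right) \cdot \frac{\partial}{\partial w} \left( w x^{(i)} + b - y^{(i)} \right) \\
  &= \frac{1}{2m} \sum_{i=1}^{m} 2 \left( w x^{(i)} + b - y^{(i)} \right) x^{(i)} \\
  &= \frac{1}{m} \sum_{i=1}^{m} \left( f_{w,b}(x^{(i)}) - y^{(i)} \right) x^{(i)} \\[6pt]
\frac{\partial}{\partial b} J(w,b)
  &= \frac{1}{2m} \sum_{i=1}^{m} 2 \left( w x^{(i)} + b - y^{(i)} \right) \cdot 1
   = \frac{1}{m} \sum_{i=1}^{m} \left( f_{w,b}(x^{(i)}) - y^{(i)} \right)
\end{aligned}
```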

If you remember, I mentioned Parul Pandey’s “Understanding the Mathematics behind Gradient Descent” in my previous post, “Supervised Machine Learning – Day 2 & 3 – On My Way To Becoming A Machine Learning Person”. In her post she did mention:

Primarily we shall be dealing with two concepts from calculus:

Power rule and chain rule.
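The power rule and chain rule results can also be checked numerically. The sketch below uses NumPy; the functions `cost`, `analytic_grad` and `numeric_grad` and the toy data are made up for illustration. It compares the analytic single-feature gradients against central finite differences, and also computes what ∂J/∂w would be if x^(i) really were dropped:

```python
import numpy as np


def cost(w, b, x, y):
    """Squared-error cost J(w, b) = (1/2m) * sum_i (w*x_i + b - y_i)^2 (single feature)."""
    m = x.shape[0]
    return np.sum((w * x + b - y) ** 2) / (2 * m)


def analytic_grad(w, b, x, y):
    """Gradients from the power rule + chain rule."""
    m = x.shape[0]
    err = w * x + b - y
    dj_dw = np.sum(err * x) / m   # inner derivative w.r.t. w is x^(i)
    dj_db = np.sum(err) / m       # inner derivative w.r.t. b is 1
    return dj_dw, dj_db


def numeric_grad(w, b, x, y, h=1e-6):
    """Central finite differences: no calculus rules involved."""
    dj_dw = (cost(w + h, b, x, y) - cost(w - h, b, x, y)) / (2 * h)
    dj_db = (cost(w, b + h, x, y) - cost(w, b - h, x, y)) / (2 * h)
    return dj_dw, dj_db


# Hypothetical toy data and an arbitrary point (w, b) at which to compare gradients
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([3.0, 5.0, 7.5, 8.0])
w, b = 0.5, 0.2

print(analytic_grad(w, b, x, y))
print(numeric_grad(w, b, x, y))

# The "x^(i) was lost" version of dJ/dw, for comparison
wrong_dj_dw = np.sum(w * x + b - y) / x.shape[0]
print(wrong_dj_dw)
```

Since J is quadratic in w and b, the central differences should agree with the analytic gradients up to floating-point rounding, while the version without x^(i) comes out clearly different (−4.425 here, instead of −12.625).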

Well, she is right as well 😁

So happy I learned a lot today!