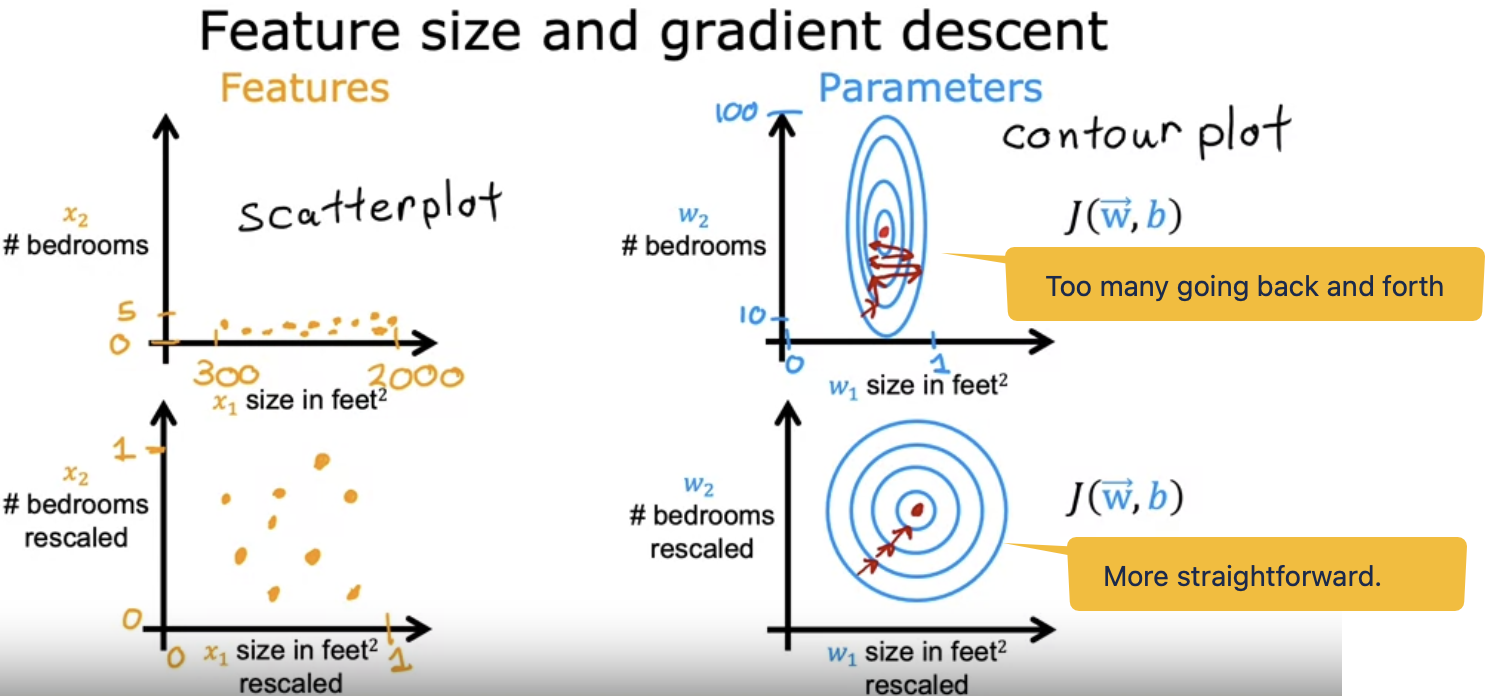

Welcome back! I didn't get much time to work on the course in the past 5 days!!! Finally resuming today! Today I reviewed Feature scaling part 1 and learned Feature scaling part 2 and Checking gradient descent for convergence. The course is getting harder: for a 20-minute video, I spent double the time and needed to check external articles to get a better understanding. Feature Scaling Trying to understand what "Feature Scaling" is... What are the features and parameters in the formula below? price-hat (the predicted price) = w1x1 + w2x2 + b. x1 and x2 are features: the former represents the size of the house, the latter the number of bedrooms. w1 and w2 are parameters. When the possible range of values of a feature is large, it's more likely that a good model will learn to choose a relatively small parameter value. Likewise, when the possible values of the feature are small, like the number of bedrooms, then a reasonable value for its parameter will be relatively large, like 50. So how does this relate to gradient descent? At the end of this video, Andrew explained that the features need to be re-scaled or transformed so that the cost function J using the transformed data is better shaped and gradient descent can find a much more direct path to the global minimum. When you have different features that take on very different ranges of values, it can cause gradient descent to run slowly, but re-scaling the different features so they all take on a comparable range of values can speed up gradient descent significantly. Andrew Ng One key aspect of feature engineering is…
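To make the re-scaling idea concrete for myself, here is a minimal sketch of one common approach, z-score normalization. The house data below is made up for illustration, and the helper name `zscore_normalize` is my own, not something taken from the course lab.

```python
import numpy as np

# Hypothetical training data: column 0 is house size (sq ft), column 1 is bedrooms.
X = np.array([
    [2104.0, 5.0],
    [1416.0, 3.0],
    [852.0, 2.0],
    [1534.0, 3.0],
])

def zscore_normalize(X):
    """Rescale each feature (column) to zero mean and unit standard deviation."""
    mu = X.mean(axis=0)      # per-feature mean
    sigma = X.std(axis=0)    # per-feature standard deviation
    return (X - mu) / sigma, mu, sigma

X_norm, mu, sigma = zscore_normalize(X)

# Before scaling the two feature ranges differ by orders of magnitude;
# after scaling they are comparable.
print("range before:", X.max(axis=0) - X.min(axis=0))
print("range after: ", X_norm.max(axis=0) - X_norm.min(axis=0))
```

Keep `mu` and `sigma` around: any new house you want to predict on has to be scaled with the same values used for training.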

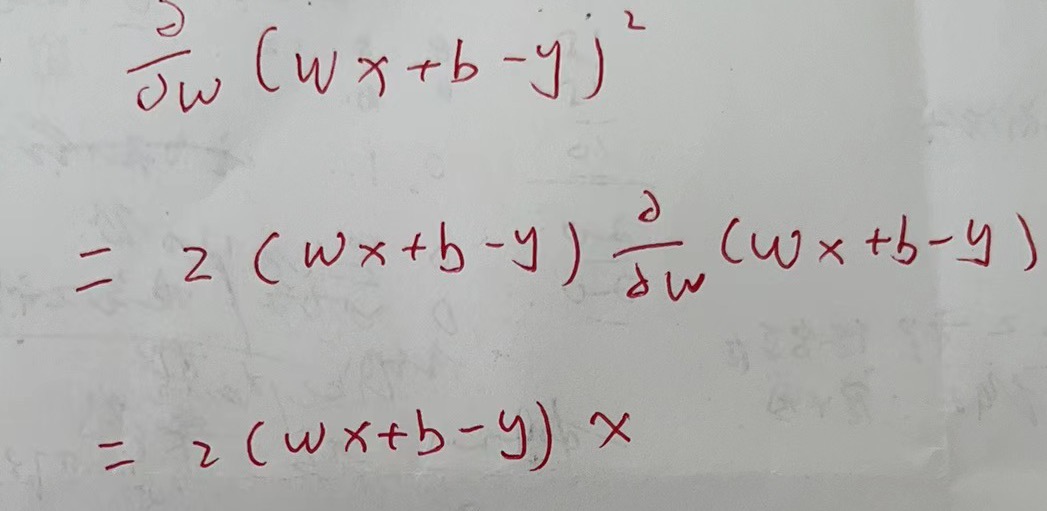

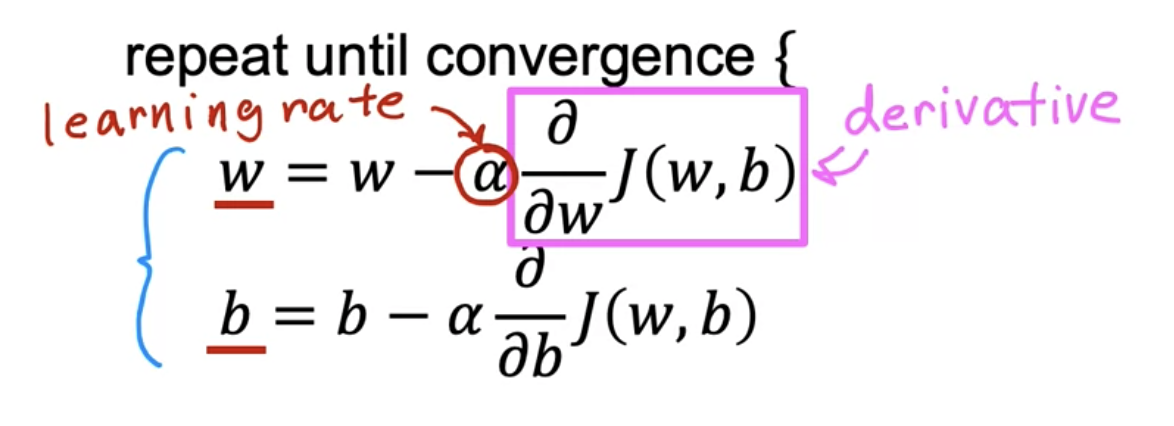

Today I spent 10 30 60 mins reviewing previous notes, and just realized that's a lot. I am amazing 🤩 Today I start with "Gradient descent for multiple linear regression". Gradient descent for multiple linear regression Holy... At the beginning Andrew threw out the formula below and said he hoped we still remembered it. I didn't: Why couldn't I recognize it... Where does it come from? I spent 30 mins reviewing several previous videos, then found it... The important videos are: 1. Week 1 Implementing gradient descent, where Andrew just wrote down the formula below without explaining it (he explained it later) 2. Gradient descent for linear regression Holy! Found a mistake in Andrew's course! On the above screenshot, Andrew lost x(i) at the end of the first line! WOW! I ROCK! Spent almost 60 mins! I am done for today! The situation reversed an hour later But I felt upset. I was NOT convinced I had found such a simple mistake, especially in Andrew's most popular Machine Learning course! I started trying to work through the formula. And.... I found out I was indeed too young, too naive... Andrew was right... I got help from my college classmate, who has been dealing with calculus every day for more than 20 years... This is the derivation of the formula written by him: He said this to me like my math teacher in college: Chain rule, deriving step by step. If you still remember, I mentioned Parul Pandey’s Understanding the Mathematics behind Gradient Descent in my previous post Supervised Machine Learning – Day 2 & 3 – On My Way To Becoming A Machine Learning Person, in her post she…
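For reference, here is the standard chain-rule derivation of the two partial derivatives for multiple linear regression. This is my own write-up, not my classmate's handwritten version, and it assumes the usual squared-error cost with the 1/(2m) factor and the model f(x) = w · x + b.

```latex
\begin{align*}
f_{\vec{w},b}(\vec{x}) &= \vec{w}\cdot\vec{x} + b \\
J(\vec{w},b) &= \frac{1}{2m}\sum_{i=1}^{m}\left(f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}\right)^2 \\[6pt]
\frac{\partial J}{\partial w_j}
  &= \frac{1}{2m}\sum_{i=1}^{m} 2\left(f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}\right)
     \cdot \frac{\partial}{\partial w_j}\left(\vec{w}\cdot\vec{x}^{(i)} + b - y^{(i)}\right) \\
  &= \frac{1}{m}\sum_{i=1}^{m}\left(f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}\right) x_j^{(i)} \\[6pt]
\frac{\partial J}{\partial b}
  &= \frac{1}{2m}\sum_{i=1}^{m} 2\left(f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}\right)
     \cdot \frac{\partial}{\partial b}\left(\vec{w}\cdot\vec{x}^{(i)} + b - y^{(i)}\right) \\
  &= \frac{1}{m}\sum_{i=1}^{m}\left(f_{\vec{w},b}(\vec{x}^{(i)}) - y^{(i)}\right)
\end{align*}
```

The inner derivative is x_j^(i) for w_j but just 1 for b, which is why the x term shows up in one update rule and not the other.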

Mastering Multiple Features & Vectorization: Supervised Machine Learning – Day 4 and 5

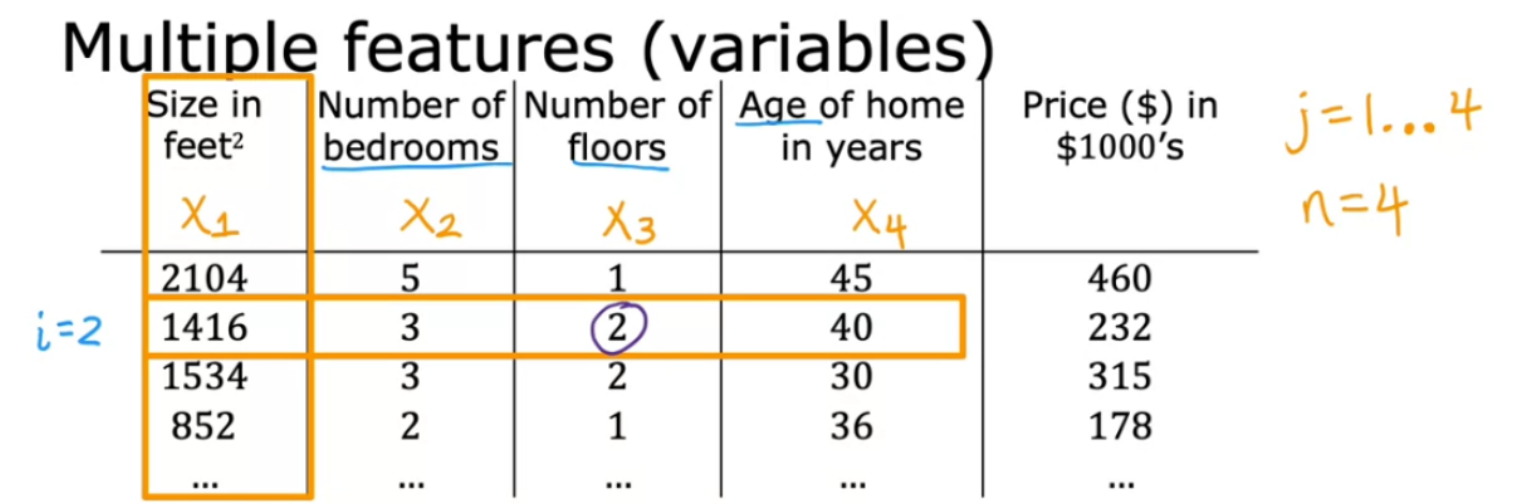

So difficult to find time for this Day 4 was a long day for me; I just got 15 mins before bed to quickly skim through the videos "multiple features" and "vectorization part 1". Day 5, a longer day than yesterday... went to urgent care in the morning... then back-to-back meetings after coming back.... lunch... back-to-back meetings again... needed to step out again... Anyway, that's life. Multiple features (variables) and Vectorization In "multiple features", Andrew uses crisp language to explain how to simplify the multiple-features formula by using vectors and the dot product. In "Part 1", Andrew introduced how to use NumPy to compute the dot product and said GPUs are good at this type of calculation. According to him, NumPy functions can use parallel hardware (like a GPU) to make the dot product fast. In "Part 2", Andrew further explained why computers can do the dot product fast. He used gradient descent as an example. The lab was informative; I walked through all of it, though I already knew most of it. More links about vectorization can be found here. Questions to help myself learn I created the following questions to test my knowledge later. What is x₁⁽⁴⁾ in the above graph? Ps. feel free to check out the series on my Supervised Machine Learning journey.
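As a quick note to self, here is a small sketch comparing the loop version of the multiple-features prediction with the vectorized `np.dot` version. The numbers in `w`, `x`, and `b` are made up, and the function names are mine.

```python
import numpy as np

# Hypothetical parameters and a single example with three features.
w = np.array([1.0, 2.5, -3.3])
x = np.array([10.0, 20.0, 30.0])
b = 4.0

def predict_loop(x, w, b):
    """f(x) = w1*x1 + w2*x2 + ... + b, computed one term at a time."""
    total = 0.0
    for j in range(len(w)):
        total += w[j] * x[j]
    return total + b

def predict_vectorized(x, w, b):
    """Same prediction, with the sum of products done by np.dot."""
    return np.dot(w, x) + b

print(predict_loop(x, w, b))
print(predict_vectorized(x, w, b))
```

Both give the same prediction; the vectorized one is shorter and lets NumPy run the multiply-and-add in optimized native code instead of a Python loop.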

Supervised Machine Learning – Day 2 & 3 - On My Way To Becoming A Machine Learning Person

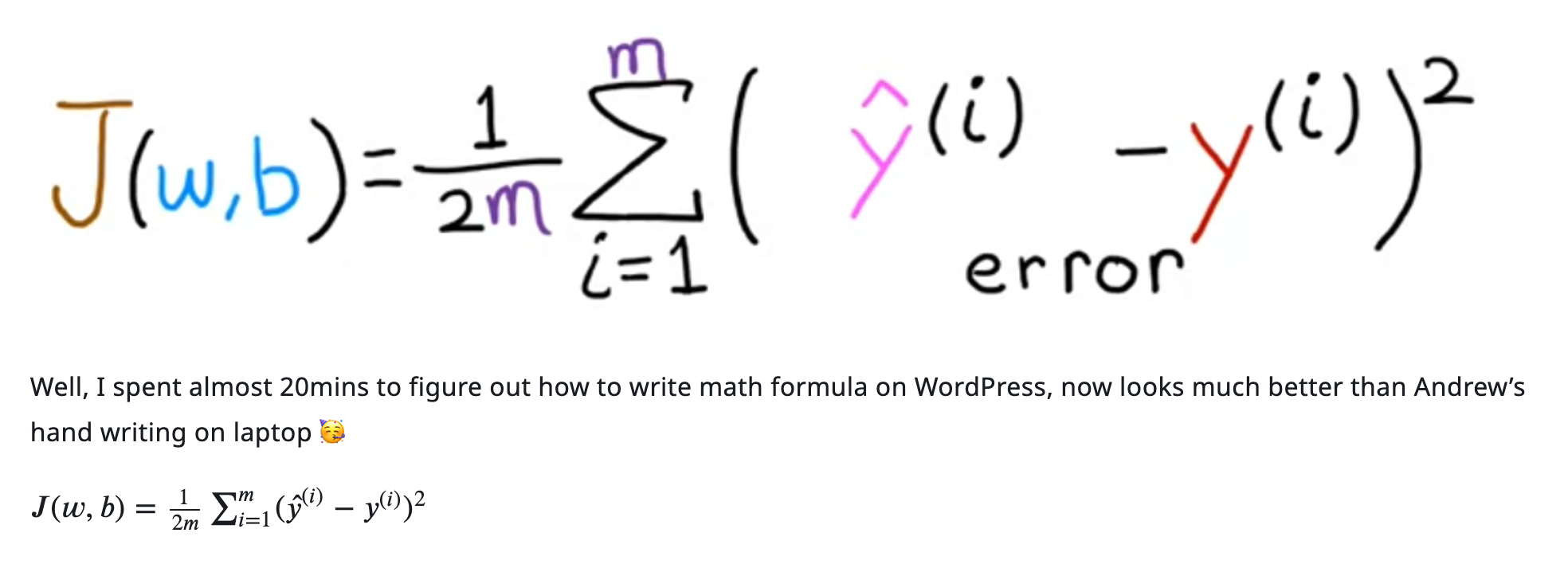

A brief introduction Day 2 I was busy and managed only 15 mins for the "Supervised Machine Learning" videos + 15 mins watching But what is a neural network? | Chapter 1, Deep learning from 3blue1brown. Day 3 I managed 30+ mins on "Supervised Machine Learning", and spent some time reading articles, like Parul Pandey's Understanding the Mathematics behind Gradient Descent, which is really good. I like math 😂 So these notes are mixed. Notes Implementing gradient descent Notation I was struggling with writing LaTeX for the formula, then found this table useful (source is here): Andrew said I don't need to worry about derivatives and calculus at all. I trust him. Next I dived into my bookcases, dug out the advanced mathematics books I used in college, and spent 15 mins reviewing. Yes, I don't need them. Snapped two epic shots of the "Advanced Mathematics" book from my college days to show off my killer skills in derivatives and calculus - pretty sure I've unlocked Math Wizard status! Reading online Okay. Reading some online articles. If we are able to compute the derivative of a function, we know in which direction to proceed to minimize it (for the cost function). From Parul Pandey, Understanding the Mathematics behind Gradient Descent Parul briefly introduced the Power rule and the Chain rule; fortunately, I still remember them from college. I am so proud. After reviewing various explanations of gradient descent, I truly appreciate Andrew's straightforward and precise approach! He was a bit kiddish sometimes, drawing a stick man walking down a hill…
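To see "the derivative tells us which direction to go" in action, here is a tiny sketch of gradient descent on a toy one-variable function. The function f(w) = (w - 3)², the learning rate, and the starting point are all my own choices for illustration, not from the course or from Parul's article.

```python
def f(w):
    """Toy cost function with its minimum at w = 3."""
    return (w - 3.0) ** 2

def df(w):
    """Derivative of f via the power rule and chain rule: 2 * (w - 3)."""
    return 2.0 * (w - 3.0)

def gradient_descent(w_init, alpha, num_iters):
    """Repeatedly step against the derivative: w := w - alpha * f'(w)."""
    w = w_init
    for _ in range(num_iters):
        w = w - alpha * df(w)
    return w

w_final = gradient_descent(w_init=0.0, alpha=0.1, num_iters=100)
print(w_final, f(w_final))
```

When w is left of the minimum the derivative is negative, so the update moves w to the right; when w is right of it the derivative is positive and the update moves w to the left. That's the stick man walking downhill.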

The Beginning As I've been advancing the technologies behind my AI-powered product knowledge base chatbot, which is based on Django/LangChain/OpenAI/Chroma/Gradio and sits on the AI application/framework layer, I have also kept an eye on how to build a pipeline for assessing the accuracy of machine learning models, which is a part of AI DevOps/infra. But I realized that I had no idea how to measure a model's accuracy. This made me upset. Then I started looking for answers. My first Google search on this was "how to measure llm accuracy", which brought me to Evaluating Large Language Models (LLMs): A Standard Set of Metrics for Accurate Assessment. It's informative and not lengthy, and I read through it. This opened a new world to me. There is a standard set of metrics for evaluating LLMs, including: I don't know all of them or where to start! I had to tell myself, "Man, you don't know machine learning..." So my next search was "machine learning course", and Andrew Ng's Supervised Machine Learning: Regression and Classification came out on top of the Google search results! It's so famous, and I had known about it before! Then I made a decision: I want to take action now and finish it thoroughly! I immediately enrolled in the course. Now let's start the journey! Day 1 Started Basics 1. What is ML? Defined by Arthur Samuel back in the 1950s 😯 "Field of study that gives computers the ability to learn without being explicitly programmed." The above claim gives the key point (the highlighted part) which could answer the question from…

The Font Problem in VSCode After finishing the configuration in Terminal Mastery: Crafting A Productivity Environment With ITerm, Tmux, And Beyond, we got a nice terminal: However, after I installed VSCode, its terminal couldn't display certain glyphs; it looked like this: The Fix We need to fix it by updating the font family in VSCode. 1. Identify the name of the font family. Open Font Book on Mac, and we can see: The font that supports those glyphs is "MesloLGM Nerd Font Mono", which is also what I configured for iTerm2. 2. In VSCode, press Command + comma to open Settings, search for "terminal.integrated.fontFamily", and set the font name as below: 3. Now we can see it displays correctly: Well done!

I love working on Linux terminals Rewind a decade or so, and you'd find me ensconced within the embrace of a Linux terminal for the duration of my day. Here, amidst the digital ebb and flow, I thrived: maneuvering files and folders with finesse, weaving code in Vim, orchestrating service maintenance, decoding kernel dumps, and seamlessly transitioning across a mosaic of tmux sessions. The graphical user interface? A distant thought, unnecessary for the tapestry of tasks at hand. Like all geeks, every tech enthusiast harbors a unique sanctuary of productivity: a bespoke digital workshop where code flows like poetry, and ideas ignite with the spark of creativity. It’s a realm where custom tools and secret utilities interlace, forming the backbone of unparalleled efficiency and innovation. Today, I'm pulling back the curtain to reveal the intricacies of my personal setup on Mac. I invite you on this meticulous journey through the configuration of my Mac-based development sanctuary. Together, let's traverse this path, transforming the mundane into the magnificent, one command, one tool, one revelation at a time. iTerm2 After account setup on Mac, the initial terminal looked like this when I logged in: Let's equip it with iTerm2! What is iTerm2? iTerm2 is a replacement for Terminal and the successor to iTerm. It works on Macs with macOS 10.14 or newer. iTerm2 brings the terminal into the modern age with features you never knew you always wanted. Why Do I Want It? Check out the impressive features and screenshots. If you spend a lot of time in a terminal, then you'll appreciate all the…

Hey there! Recently, I encountered some encoding issues. Then I realized that I haven't seen any articles that give a crisp yet interesting explanation of Base64/Base64URL/Base32 encoding! Ah! I should write one! So, grab your gear, and let's decode these fascinating encoding schemes together! The Enigma of Base64 Encoding Why do we need Base64? Imagine you're sending a beautiful picture postcard through the digital world, but the postal service (the internet, in this case) only handles plain text. How do you do it? Enter Base64 encoding – it's like magic that transforms binary data (like images) into a text format that can easily travel through the internet without getting corrupted. Base64 takes your binary data and represents it as text using 64 different characters: In more detail, it will: It's widely used in email attachments, data URLs in web pages, and anywhere you need to squeeze binary data into text-only zones. A simple text like "Hello!", when encoded in Base64, turns into "SGVsbG8h". Usage of Base64 in Data URIs Data URIs (Uniform Resource Identifiers) offer a powerful way to embed binary data, such as images, directly into HTML or CSS files, using Base64 encoding. This method eliminates the need for external file references, resulting in fewer HTTP requests and potentially faster page loads. Here's how it works in practice: Embedding an Image in HTML Using Data URI Let's say you have a small logo or icon that you want to include directly in your HTML page without linking to an external file. You can use Base64 to encode the…
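Here is a short sketch using Python's standard `base64` module to reproduce the "Hello!" example and to build a data URI. The helper `to_data_uri` is a hypothetical name of mine, and the bytes used are just the 8-byte PNG file signature standing in for a real image.

```python
import base64

# Plain Base64: text -> bytes -> Base64 text, and back again.
text = "Hello!"
encoded = base64.b64encode(text.encode("utf-8")).decode("ascii")
decoded = base64.b64decode(encoded).decode("utf-8")
print(encoded)  # SGVsbG8h
print(decoded)  # Hello!

# Base64URL swaps '+' and '/' for '-' and '_' so the output is URL-safe.
raw = b"\xfb\xff"
standard = base64.b64encode(raw).decode("ascii")
url_safe = base64.urlsafe_b64encode(raw).decode("ascii")

def to_data_uri(data: bytes, mime_type: str) -> str:
    """Build a data URI of the form data:<mime>;base64,<payload>."""
    payload = base64.b64encode(data).decode("ascii")
    return f"data:{mime_type};base64,{payload}"

# Stand-in for real image bytes: only the PNG signature, not a valid image.
png_signature = b"\x89PNG\r\n\x1a\n"
uri = to_data_uri(png_signature, "image/png")
print(uri)
```

With a real file you would read the bytes with `open(path, "rb").read()` and drop the resulting string into the `src` attribute of an `<img>` tag.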

Greetings Hi there! I was trying some new stuff with Vue recently. I downloaded a free version of the Vue Argon dashboard code and tried to compile it locally. It's straightforward: Then I got the dist folder: Interesting... Then I double-clicked index.html, expecting it to display the beautiful landing page, but it didn't happen... This is strange... What went wrong? I tried npm run serve, and it works well: I can see the portal and navigate between pages without issues. I must fix this! Should be quick! Bingo! The root cause is that the Vue project used the router with createWebHistory instead of createWebHashHistory! That results in different ways of handling static assets and routing. Using createWebHistory in a production environment is preferred, as it provides several significant benefits: I just want to use createWebHashHistory in my local development environment. The fix Now, the fix is easy. First, modify scripts in package.json to specify the mode for serve and build; I also added two new items, serve_prod and build_dev: Second, create or edit vue.config.js as below: Lastly, update src/router/index.js to handle the mode accordingly: The original code was: Now it looks like this: Now, after running npm run build_dev again, I can see the portal 😎 Thanks for reading! Have a good day!

The Background... I have a Django/Vue development environment running locally. To streamline my Django development, I typically open six tmux windows 😎 : I use one tmux session to hold all of the above. However, my laptop sometimes needs to reboot, and after a reboot, all of my windows are gone 😓 I have configured tmux-resurrect and tmux-continuum to try to handle this scenario, but they couldn't re-run those commands even though they could restore the windows correctly. Let me show you the screenshots. The problem... Typically, my development windows look like this: As you can see, the services are running within their respective windows. If I save them with tmux-resurrect, then after a reboot tmux-resurrect and tmux-continuum can of course restore the windows, but the services and all environment variables are gone. To simulate this, let me kill all sessions in tmux and check the output: Now start tmux again; here is the status I can see, tmux restored the previously saved windows: Let's check the windows now: None of the services is running 🙉 The Complaint... As the supreme overlord of geekcoding101.com, I simply cannot let such imperfection slide. Not on my watch. Nope, not happening. This ain't it, chief. Okay, let's fix it! The Fix... .... Okay! I wrote a script.. oh no! Two scripts! One is called start_tmux_dev_env.sh and creates all the windows; it invokes prepare_dev_env.sh, which exports functions to initialize environment variables in specific windows. A snippet of start_tmux_dev_env.sh: The prepare_dev_env.sh looks like: The End... Now, after a reboot, I can just invoke the script start_tmux_dev_env.sh and it will spin up all the windows for me in seconds! I'M Really Proud…