# Terms Used in "Attention is All You Need"

Below is a table of key terms from the paper “Attention is All You Need,” giving each English term, its Chinese translation, and a brief explanation. Where applicable, links to external resources are provided for further reading.

| English Term | Chinese Translation | Explanation | Link |

|---|---|---|---|

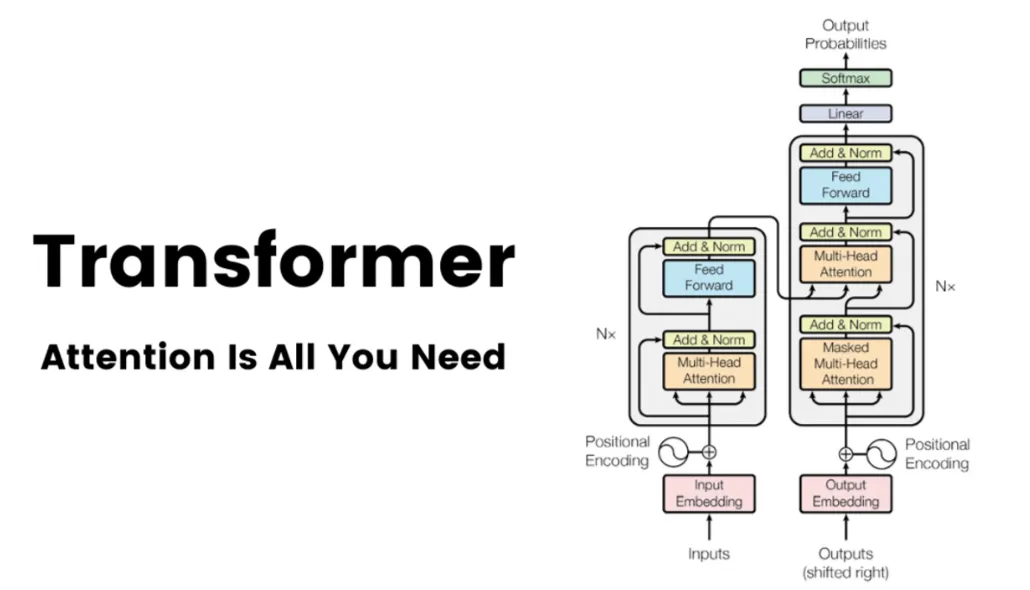

| Encoder | 编码器 | The component that processes input sequences. | |

| Decoder | 解码器 | The component that generates output sequences. | |

| Attention Mechanism | 注意力机制 | Measures relationships between sequence elements. | Attention Mechanism Explained |

| Self-Attention | 自注意力 | Focuses on dependencies within a single sequence. | |

| Masked Self-Attention | 掩码自注意力 | Prevents the decoder from seeing future tokens. | |

| Multi-Head Attention | 多头注意力 | Combines multiple attention layers for better modeling. | |

| Positional Encoding | 位置编码 | Adds positional information to embeddings. | |

| Residual Connection | 残差连接 | Shortcut connections to improve gradient flow. | |

| Layer Normalization | 层归一化 | Stabilizes training by normalizing inputs. | Layer Normalization Details |

| Feed-Forward Neural Network (FFNN) | 前馈神经网络 | Processes data independently of sequence order. | Feed-Forward Networks in NLP |

| Recurrent Neural Network (RNN) | 循环神经网络 | Processes sequences step-by-step, maintaining state. | RNN Basics |

| Convolutional Neural Network (CNN) | 卷积神经网络 | Uses convolutions to extract features from input data. | CNN Overview |

| Parallelization | 并行化 | Performing multiple computations simultaneously. | |

| BLEU (Bilingual Evaluation Understudy) | 双语评估替代 | A metric for evaluating the accuracy of translations. | Understanding BLEU |
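Several of the terms above (attention, masked self-attention, multi-head attention, positional encoding, residual connection, layer normalization, and the position-wise feed-forward network) fit together in a single encoder layer. The following is a minimal pure-Python sketch under simplifying assumptions, not the paper's reference implementation: matrices are lists of lists, the learned per-head projections of multi-head attention and the learned gain and bias of layer normalization are omitted, and the sizes, embeddings, and FFN weights are made-up toy values. Function names such as `multi_head_self_attention` and `residual_then_norm` are hypothetical, chosen for illustration.

```python
import math

# Matrices are plain lists of lists (one row per sequence position), so this
# sketch needs only the standard library. Sizes and weights are toy values.

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def softmax(row):
    """Numerically stable softmax; -inf scores receive weight 0."""
    peak = max(row)
    exps = [math.exp(s - peak) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    causal=True gives masked self-attention: position i may attend only
    to positions j <= i, so later (future) tokens are hidden.
    """
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    for i, row in enumerate(scores):
        for j in range(len(row)):
            if causal and j > i:
                row[j] = float("-inf")  # mask out future positions
            else:
                row[j] /= math.sqrt(d_k)
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

def multi_head_self_attention(x, num_heads, causal=False):
    """Simplified multi-head self-attention.

    ASSUMPTION: the learned per-head projections (W^Q, W^K, W^V) and the output
    projection W^O are omitted; each head attends over its own slice of the
    feature dimension, and the head outputs are concatenated per position.
    """
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads
    heads = []
    for h in range(num_heads):
        part = [row[h * d_head:(h + 1) * d_head] for row in x]
        out, _ = attention(part, part, part, causal)
        heads.append(out)
    return [sum((head[i] for head in heads), []) for i in range(len(x))]

def positional_encoding(seq_len, d_model):
    """Sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    return [[math.sin(pos / 10000 ** ((j - j % 2) / d_model)) if j % 2 == 0
             else math.cos(pos / 10000 ** ((j - j % 2) / d_model))
             for j in range(d_model)] for pos in range(seq_len)]

def layer_norm(row, eps=1e-6):
    """Normalize one position's features to zero mean and unit variance
    (the learned gain and bias are omitted)."""
    mean = sum(row) / len(row)
    var = sum((a - mean) ** 2 for a in row) / len(row)
    return [(a - mean) / math.sqrt(var + eps) for a in row]

def feed_forward(row, w1, b1, w2, b2):
    """Position-wise FFN, max(0, x W1 + b1) W2 + b2, applied to one position."""
    hidden = [max(0.0, h + b) for h, b in zip(matmul([row], w1)[0], b1)]
    return [o + b for o, b in zip(matmul([hidden], w2)[0], b2)]

def residual_then_norm(x, sublayer):
    """Residual connection followed by layer norm: LayerNorm(x + Sublayer(x))."""
    out = sublayer(x)
    return [layer_norm([a + b for a, b in zip(xr, orow)]) for xr, orow in zip(x, out)]

def encoder_layer(x, num_heads, ffn_weights):
    """One encoder layer: self-attention sub-layer, then feed-forward sub-layer."""
    x = residual_then_norm(x, lambda h: multi_head_self_attention(h, num_heads))
    return residual_then_norm(x, lambda h: [feed_forward(r, *ffn_weights) for r in h])

if __name__ == "__main__":
    d_model, d_ff, seq_len = 4, 8, 3
    # Made-up token embeddings; positional encodings are added element-wise.
    emb = [[1.0, 0.0, 1.0, 0.0], [0.0, 2.0, 0.0, 2.0], [1.0, 1.0, 1.0, 1.0]]
    pe = positional_encoding(seq_len, d_model)
    x = [[e + p for e, p in zip(er, pr)] for er, pr in zip(emb, pe)]

    _, w = attention(x, x, x, causal=True)
    print([round(p, 3) for p in w[0]])  # [1.0, 0.0, 0.0]: token 0 sees only itself

    # Arbitrary deterministic FFN weights, for illustration only.
    w1 = [[0.1 * ((i + j) % 3 - 1) for j in range(d_ff)] for i in range(d_model)]
    w2 = [[0.1 * ((i * j) % 3 - 1) for j in range(d_model)] for i in range(d_ff)]
    y = encoder_layer(x, num_heads=2, ffn_weights=(w1, [0.0] * d_ff, w2, [0.0] * d_model))
    print(len(y), len(y[0]))  # 3 4
```

Running the script prints the masked attention weights for the first token, which can attend only to itself, followed by the output shape (3 positions by 4 features). The attention inside `encoder_layer` is unmasked, as in the encoder; calling `multi_head_self_attention(..., causal=True)` gives the masked variant used in the decoder's self-attention.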

This table covers the core terminology of the “Attention is All You Need” paper. For more detail on any term, the linked resources are a good place to start.