1. What Is an Embedding?

An embedding is the “translator” that converts language into numbers, enabling AI models to understand and process human language. AI doesn’t comprehend words, sentences, or syntax; it only works with numbers. Embeddings assign a unique numerical representation (a vector) to words, phrases, or sentences. Think of an embedding as a language map: each word is a point on the map, and its position reflects its relationship with other words. For example, “cat” and “dog” might be close together on the map, while “cat” and “car” are far apart.

2. Why Do We Need Embeddings?

Human language is rich and abstract, but AI models need to translate it into something mathematical to work with. Embeddings solve several key challenges:

(1) Vectorizing Language

Words are converted into vectors (lists of numbers). For example:

“cat” → [0.1, 0.3, 0.5]

“dog” → [0.1, 0.32, 0.51]

These vectors make it possible for models to perform mathematical operations like comparing, clustering, or predicting relationships.

(2) Capturing Semantic Relationships

The true power of embeddings lies in capturing semantic relationships between words. For example: “king - man + woman ≈ queen”. This demonstrates how embeddings encode complex relationships in a numerical format.

(3) Addressing Data Sparsity

Instead of assigning a unique index to every word (which can lead to sparse data), embeddings compress language into a limited number of dimensions (e.g., 100 or 300), making computations much more efficient.

3. How Are Embeddings Created?

Embeddings are generated through machine learning models trained on large datasets. Here are some popular methods:

(1) Word2Vec

One of…
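To make the "language map" idea from the embedding excerpt concrete, here is a minimal sketch that compares toy vectors with cosine similarity. The "cat" and "dog" vectors are the ones quoted in the text; the "car" vector and the `cosine_similarity` helper are made up for illustration, and real embeddings would have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# "cat" and "dog" come from the text; "car" is a hypothetical vector
# chosen only to sit far away from the other two.
embeddings = {
    "cat": [0.1, 0.3, 0.5],
    "dog": [0.1, 0.32, 0.51],
    "car": [0.9, 0.1, 0.05],
}

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])

print(f"cat vs dog: {cat_dog:.3f}")
print(f"cat vs car: {cat_car:.3f}")
```

A value near 1 means the two vectors point in almost the same direction, which is the numerical version of "close together on the map".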

1. What is Prompt Engineering?

Prompt Engineering is a core technique in the field of generative AI. Simply put, it involves crafting effective input prompts to guide AI in producing the desired results. Generative AI models (like GPT-3 and GPT-4) are essentially predictive tools that generate outputs based on input prompts. The goal of Prompt Engineering is to optimize these inputs to ensure that the AI performs tasks according to user expectations. Here’s an example:

Input: “Explain quantum mechanics in one sentence.”

Output: “Quantum mechanics is a branch of physics that studies the behavior of microscopic particles.”

The quality of the prompt directly impacts AI performance. A clear and targeted prompt can significantly improve the results generated by the model.

2. Why is Prompt Engineering important?

The effectiveness of generative AI depends heavily on how users present their questions or tasks. The importance of Prompt Engineering can be seen in the following aspects:

(1) Improving output quality

A well-designed prompt reduces the risk of the AI generating incorrect or irrelevant responses. For example:

Ineffective Prompt: “Write an article about climate change.”

Optimized Prompt: “Write a brief 200-word report on the impact of climate change on the Arctic ecosystem.”

(2) Saving time and cost

A clear prompt minimizes trial and error, improving efficiency, especially in scenarios requiring large-scale outputs (e.g., generating code or marketing content).

(3) Expanding AI’s use cases

With clever prompt design, users can leverage AI for diverse and complex tasks, from answering questions to crafting poetry, generating code, or even performing data analysis.

3. Core techniques in Prompt…
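One way to picture the move from the ineffective prompt to the optimized one is to treat a prompt as a task plus explicit constraints. The sketch below is only an illustration: `build_prompt` is a hypothetical helper, not part of any library, and the constraint names are made up.

```python
def build_prompt(task, constraints):
    """Join a task sentence with explicit constraints into one prompt string."""
    lines = [task.strip()]
    for name, value in constraints.items():
        # Each constraint becomes its own bullet so nothing is left implicit.
        lines.append(f"- {name}: {value}")
    return "\n".join(lines)


vague = "Write an article about climate change."
specific = build_prompt(
    "Write a report about climate change.",
    {"Length": "about 200 words", "Focus": "impact on the Arctic ecosystem"},
)

print(vague)
print()
print(specific)
```

The second prompt carries the same request but states length and focus up front, which is the kind of detail the optimized example in the text adds.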

1. What Are Parameters?

In deep learning, parameters are the trainable components of a model, such as weights and biases, which determine how the model responds to input data. These parameters adjust during training to minimize errors and optimize the model's performance. Parameter count refers to the total number of such weights and biases in a model.

Think of parameters as the “brain capacity” of an AI model. The more parameters it has, the more information it can store and process. For example:

A simple linear regression model might only have a few parameters, such as weights ($w$) and a bias ($b$).

GPT-3, a massive language model, boasts 175 billion parameters, requiring immense computational resources and data to train.

2. The Relationship Between Parameter Count and Model Performance

In deep learning, there is often a positive correlation between a model's parameter count and its performance. This phenomenon is summarized by Scaling Laws, which show that as parameters, data, and computational resources increase, so does the model's ability to perform complex tasks.

Why Are Bigger Models Often Smarter?

Higher Expressive Power

Larger models can capture more complex patterns and features in data. For instance, they not only grasp basic grammar but also understand deep semantic and contextual nuances.

Stronger Generalization

With sufficient training data, larger models generalize better to unseen scenarios, such as answering novel questions or reasoning about unfamiliar topics.

Versatility

Bigger models can handle multiple tasks with minimal or no additional training. For example, OpenAI's GPT models excel in creative writing, code generation, translation, and…
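As a rough illustration of what "parameter count" means in the excerpt above, the sketch below counts weights and biases for a fully connected network. The `count_dense_params` helper and the layer sizes are assumptions chosen for the example; real architectures such as GPT-3 have other kinds of layers that this does not model.

```python
def count_dense_params(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the width of each layer, input first, output last.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per input-output connection
        total += n_out         # one bias per output unit
    return total


# Linear regression with one feature: one weight w and one bias b.
print(count_dense_params([1, 1]))           # 2

# A small hypothetical classifier: 784 inputs, 128 hidden units, 10 outputs.
print(count_dense_params([784, 128, 10]))   # 101770
```

Even this small hypothetical network has about a hundred thousand parameters, which gives a sense of scale for the 175 billion quoted for GPT-3.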

So, I did a thing. I earned my first Microsoft certificate: Azure AI Engineer Associate! 🎉 Here is my story from training to passing the AI-102 exam.

The Learning Journey of AI-102

The journey began with a four-and-a-half-day company-provided AI-102 training session. It was a mix of online classes and labs. I was actually on vacation during this period, so I probably only managed to focus for about three days. The labs provided during the training were very useful. There were about 10 labs; each could be done up to 10 times, with each session lasting 1 to 3 hours. So I didn’t need to pay Microsoft to get familiar with the Azure AI environment. By a rough calculation, the training made 100 to 200 hours of lab time available, but I only used about 20 hours before taking the exam.

After the AI-102 training, I mainly stuck to Microsoft Learn: Designing and Implementing a Microsoft Azure AI Solution to fill gaps. Trust me, that's really helpful! The MS Learn modules helped me understand the concepts better.

Cramming and Building Knowledge for AI-102

As the exam date got closer, I quickly skimmed John Savill’s Technical Training videos on YouTube once. His videos helped me build a complete knowledge framework in my head. Once was enough for me.

Last but not least, please do read Areeb Pasha's AI-102 notes on Notion! Thanks to Areeb Pasha! They're so useful. These notes were like a concise version of MS Learn and made my studying very efficient. I managed to cover all…

Introduction

Are you ready to delve into the exciting realm of Azure AI? Whether you're a seasoned developer or just starting your journey in the world of artificial intelligence, Microsoft Build offers a transformative opportunity to harness the power of AI.

Recently I came across several good tutorials on the Microsoft website, e.g. "CLOUD SKILLS CHALLENGE: Microsoft Build: Build multimodal Generative AI experiences". I enjoyed learning from it. But I found that many people might see the very first step as a challenge: getting the az command to work on a Mac! So I decided to write down all my fixes. Let's go!

Resolution

I followed "Install Azure on Mac". Run command:

But it failed with a permission issue on the openssl package:

I fixed it by changing the owner of /usr/local/lib to the current user, but that was not enough. I hit a Python permission issue at a different location:

So I had to apply the permission to /usr/local. So the command is:

The screenshot of the brew unlink command:

Finally the installation finished successfully! Well done!

Ps. You're welcome to read my other AI Insights blog posts here.

Overfitting! Unlocking the Last Key Concept in Supervised Machine Learning – Day 11, 12

I finished the course! I have really enjoyed the learning experience in Andrew's course. Let's see what I've learned over the two days!

Overfitting - The Last Topic of this Course!

Overfitting

Overfitting occurs when a machine learning model learns the details and noise in the training data to an extent that it negatively impacts the performance of the model on new data. This means the model is great at predicting or fitting the training data but performs poorly on unseen data, due to its inability to generalize from the training set to the broader population of data.

The course explains that overfitting can be addressed by:

We can't bypass underfitting. Overfitting and underfitting are both undesirable effects that suggest a model is not well-tuned to the task at hand, but they stem from opposite causes and have different solutions.

Below are two screenshots captured from the course for my notes:

Questions help me to master the content

Words From Andrew At The End!

I want to say congratulations on how far you've come and I want to say great job for getting through all the way to the end of this video. I hope you also work through the practice labs and quizzes. Having said that, there are still many more exciting things to learn.

Awesome! I'm ready for my next machine learning journey!

Grinding Through Logistic Regression: Exploring Supervised Machine Learning – Day 10

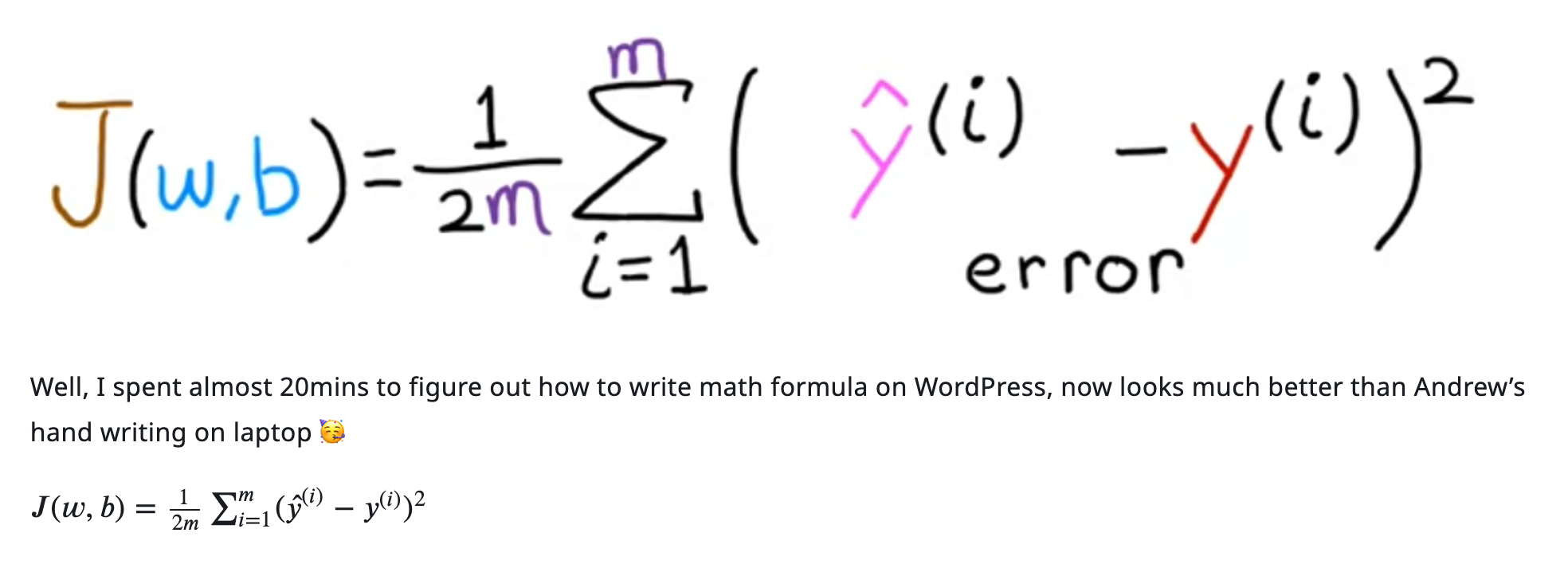

Let's continue! Today was mainly about "Decision boundary", "Cost function of logistic regression", "Logistic loss" and "Gradient Descent Implementation for logistic regression".

We found out that the "Decision boundary" is where z equals 0 in the sigmoid function, because at that point the sigmoid outputs 0.5, the neutral position between the two classes. Andrew gave an example with two variables, z = x1 + x2 - 3 (w1 = w2 = 1, b = -3), where the decision boundary is the line x1 + x2 = 3.

I want to say "Cost function for logistic regression" is the hardest part of week 3 that I've seen so far. I haven't quite figured out why the squared error cost function is not applicable and where the loss function came from. I have to re-watch the videos. The lab is also useful.

The cost function above is derived from statistics using a statistical principle called maximum likelihood estimation (MLE).

Questions and Answers

Some thoughts of today

Honestly, it feels like it's getting tougher and tougher. I can still get through the equations and derivations alright; it’s just that as I age, I feel like my brain is just not keeping up. At the end of each video, Andrew always congratulates me with a big smile, saying I’ve mastered the content of the session. But deep down, I really think what he's actually thinking is, "Ha, got you stumped again!" However, to be fair, Andrew really does explain things superbly well. I hope someday I can truly master this knowledge and use it effortlessly. Fighting!

Ps. Feel free to…
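The decision boundary example from the Day 10 notes above (w1 = w2 = 1, so z = x1 + x2 - 3) can be checked with a few lines of Python. This is a minimal sketch, not the course lab: the helper names are made up, and the loss is written in the standard binary cross-entropy form, which I am assuming is the same "logistic loss" the course presents.

```python
import math


def sigmoid(z):
    """Map any real z to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x1, x2, w1=1.0, w2=1.0, b=-3.0):
    """Probability of class 1 for the example z = x1 + x2 - 3."""
    z = w1 * x1 + w2 * x2 + b
    return sigmoid(z)


def logistic_loss(p, y):
    """Loss for one example with predicted probability p and label y (0 or 1)."""
    return -y * math.log(p) - (1 - y) * math.log(1 - p)


on_boundary = predict_proba(1.0, 2.0)  # x1 + x2 = 3, so z = 0
above = predict_proba(3.0, 3.0)        # z = 3, leans toward class 1
below = predict_proba(0.0, 0.0)        # z = -3, leans toward class 0

print(on_boundary, above, below)
print(logistic_loss(above, 1), logistic_loss(above, 0))
```

Any point on the line x1 + x2 = 3 gives exactly 0.5, and the loss is small when the prediction agrees with the label and large when it confidently disagrees.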

As you know, I've been working through Andrew Ng's Supervised Machine Learning: Regression and Classification, and it's so dry! So I also set aside some time to pick up some easy ML courses to help me understand. Today I came across Machine Learning for Absolute Beginners - Level 1, and it's really easy and beginner-friendly. I finished it in 2.5 hours, maybe because I've made some good progress in Supervised Machine Learning: Regression and Classification and so it felt easy. I want to share my notes in this blog post.

Applied AI or Shallow AI

An industrial robot that can handle only the specific small tasks it has been programmed for is called Applied AI or Shallow AI.

Under-fitting and over-fitting are challenges for Generalization.

Under-fitting

The trained model is not working well on the training data and can’t generalize to new data. Reasons may be:

An ideal training process would look like: under-fitting -> better fitting -> good fit

Over-fitting

The trained model is working well on the training data but can’t generalize well to new data. Reasons may be:

Training dataset (labeled) -> ML Training phase -> Trained Model

The input (unlabeled dataset) -> processed by Trained model (inference phase) -> output (labeled dataset)

Approaches or learning algorithms of ML systems can be categorized into:

Supervised Learning

There are two very typical tasks that are performed using supervised learning:

Shallow Learning

One of the common classification algorithms under the shallow learning category is called Support Vector Machines (SVM).

Unsupervised Learning

The goal is to automatically identify meaningful patterns in unlabeled data.

Semi-supervised…

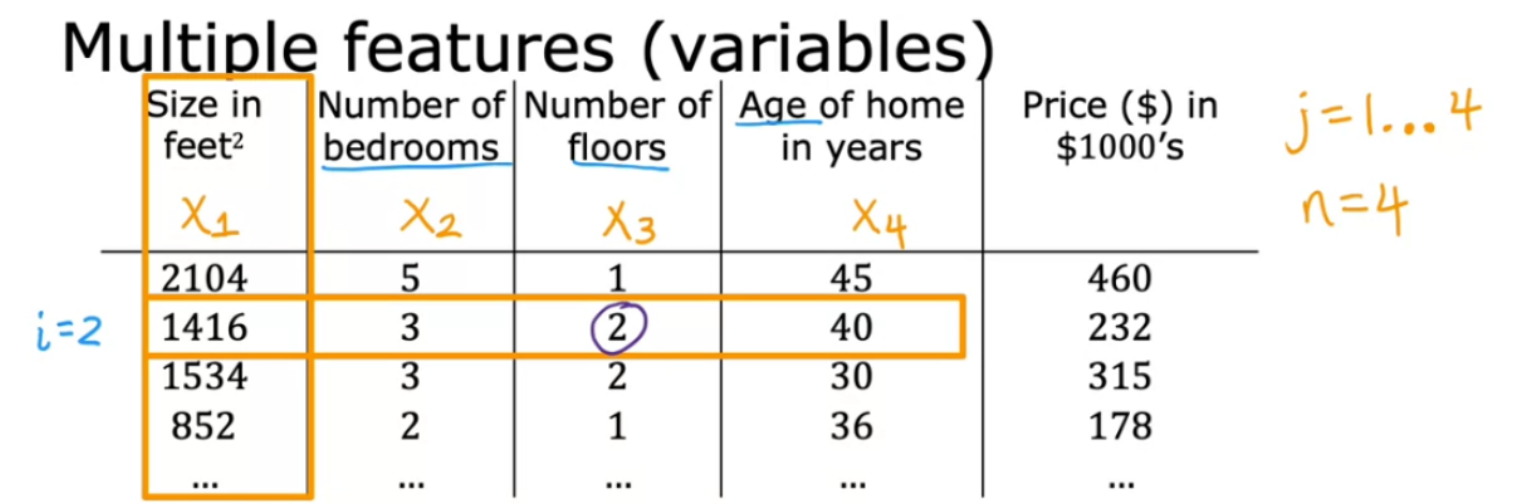

Mastering Multiple Features & Vectorization: Supervised Machine Learning – Day 4 and 5

So difficult to manage some time on this

Day 4 was a long day for me; I just got 15 minutes before bed to quickly skim through the videos "multiple features" and "vectorization part 1". Day 5 was an even longer day... went to urgent care in the morning... then back-to-back meetings after coming back... lunch... back-to-back meetings again... needed to step out again... Anyway, that's life.

Multiple features (variables) and Vectorization

In "multiple features", Andrew used crisp language to explain how to simplify the multiple features formula by using vectors and the dot product.

In "Part 1", Andrew introduced how to use NumPy to do the dot product and said GPUs are good at this type of calculation. NumPy functions can use parallel hardware to make the dot product fast.

In "Part 2", Andrew further explained why a computer can do the dot product fast. He used gradient descent as an example.

The labs were informative; I walked through all of them, though I already knew most of the material. More links about vectorization can be found here.

Questions for helping myself learning

I created the following questions to test my knowledge later.

What is $x_1^{(4)}$ in the graph above?

Ps. feel free to check out the series of my Supervised Machine Learning journey.
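For the vectorization notes above, here is a small sketch comparing a plain Python loop with NumPy's `np.dot` for the multiple-features prediction w · x + b. The weights, features, and function names are made-up values for illustration, not taken from the course lab.

```python
import numpy as np


def predict_loop(w, x, b):
    """Compute w . x + b one feature at a time."""
    total = 0.0
    for j in range(len(w)):
        total += w[j] * x[j]
    return total + b


def predict_vectorized(w, x, b):
    """Compute w . x + b with a single dot product."""
    return np.dot(w, x) + b


# Hypothetical parameters and one example with three features.
w = np.array([1.0, 2.5, -3.3])
x = np.array([10.0, 20.0, 30.0])
b = 4.0

print(predict_loop(w, x, b))
print(predict_vectorized(w, x, b))
```

Both functions return the same number; the vectorized version hands the whole multiply-and-sum to NumPy in one call instead of stepping through the features in Python, which is where the speedup on large vectors comes from.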

The Beginning

As I've been advancing the technology behind my AI-powered product knowledge base chatbot, which is built on Django/LangChain/OpenAI/Chroma/Gradio and sits at the AI application/framework layer, I've also kept an eye on how to build a pipeline for assessing the accuracy of machine learning models, which is part of AI DevOps/infra. But I realized that I had no idea how to measure a model's accuracy. This upset me, so I started looking for answers.

My first Google search on this was "how to measure llm accuracy". It brought me to Evaluating Large Language Models (LLMs): A Standard Set of Metrics for Accurate Assessment, which is informative. It's not a lengthy article and I read through it. This opened a new world to me. There is a standard set of metrics for evaluating LLMs, including:

I didn't know all of them or where to start! I had to tell myself, "Man, you don't know machine learning..." So my next search was "machine learning course", and Andrew Ng's Supervised Machine Learning: Regression and Classification came out on top of the Google search results! It's so famous, and I knew of it before! Then I made a decision: I want to take action now and finish it thoroughly! I immediately enrolled in the course.

Now let's start the journey!

Day 1 Started

Basics

1. What is ML?

Defined by Arthur Samuel back in the 1950s 😯 "Field of study that gives computers the ability to learn without being explicitly programmed." The definition above gives the key point (the highlighted part), which could answer the question from…