# Groundbreaking News: OpenAI Unveils o3 and o3 Mini with Stunning ARC-AGI Performance


On December 20, 2024, OpenAI concluded its “12 Days of OpenAI” campaign by announcing two new models: o3 and o3 mini. At the same time, the ARC Prize organization published o3’s results on the ARC-AGI benchmark. The o3 system scored 75.7% on the Semi-Private Evaluation Set in its efficient configuration and 87.5% in a high-compute configuration that used 172x the compute. These scores mark a large jump in AI’s ability to adapt to novel tasks and set a new milestone in generative AI development.

The o3 Series: From Innovation to Breakthrough

OpenAI CEO Sam Altman had hinted that this release would feature “big updates” and some “stocking stuffers.” The o3 series clearly falls into the former category. Both o3 and o3 mini represent a pioneering step towards 2025, showcasing exceptional reasoning capabilities and redefining the possibilities of AI systems.

ARC-AGI Performance: A Milestone Achievement for o3

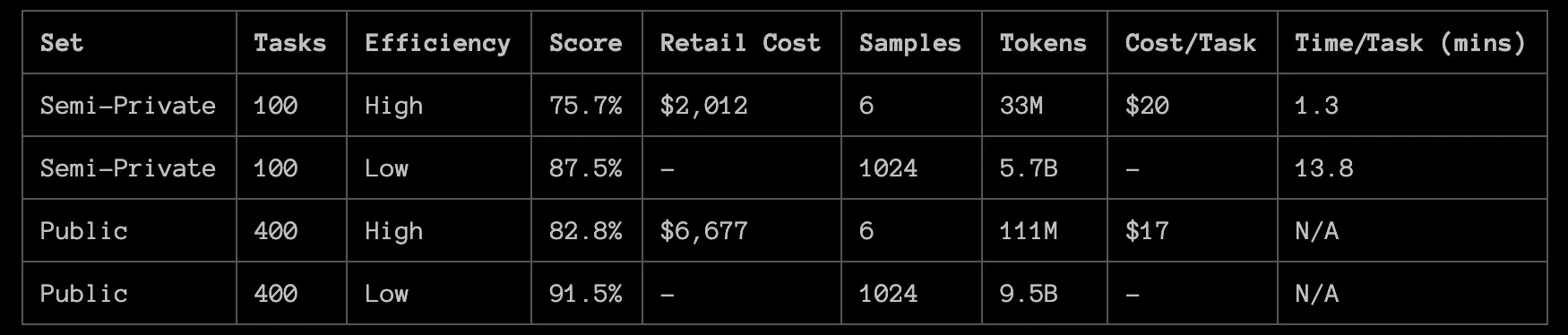

The o3 system demonstrated its capabilities on the ARC-AGI benchmark, achieving 75.7% in efficient mode and 87.5% in high-compute mode. These scores represent a major leap in AI’s ability to generalize and adapt to novel tasks, far surpassing previous generative AI models.

Source: https://arcprize.org/blog/oai-o3-pub-breakthrough


What is ARC-AGI?

ARC-AGI (Abstraction and Reasoning Corpus for Artificial General Intelligence) is a benchmark designed to test how well an AI system adapts and generalizes to problems it has not seen before. Its tasks have two defining properties:

- Simple for humans: Each task is a small grid puzzle whose rule can be inferred from a handful of worked examples.

- Challenging for AI: Each task uses a rule the model has not been explicitly trained on, so recalling similar data is not enough.
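To make the task format concrete, here is a minimal Python sketch of a task in the JSON layout used by the public ARC dataset (`train` and `test` lists of `input`/`output` grids with cell values 0 to 9). The grids, the `mirror_left_right` rule, and the helper names are invented for illustration and are not taken from the benchmark.

```python
# A toy task in the public ARC JSON layout: "train" pairs demonstrate the rule,
# "test" pairs hold the inputs to solve. The grids are invented for illustration.
toy_task = {
    "train": [
        {"input": [[1, 0], [0, 0]], "output": [[0, 1], [0, 0]]},
        {"input": [[0, 0], [2, 0]], "output": [[0, 0], [0, 2]]},
    ],
    "test": [
        {"input": [[3, 0], [0, 0]], "output": [[0, 3], [0, 0]]},
    ],
}


def mirror_left_right(grid):
    """Hypothetical candidate rule: flip each row horizontally."""
    return [list(reversed(row)) for row in grid]


def fits_demonstrations(rule, task):
    """True if the rule reproduces every training output exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])


def solves_task(rule, task):
    """A predicted grid only counts if it matches the expected grid cell for cell."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["test"])


print(fits_demonstrations(mirror_left_right, toy_task))
print(solves_task(mirror_left_right, toy_task))
```

A human sees the mirror rule from two examples; a system that only recalls training data has nothing to recall, because the rule is specific to this task.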

o3’s performance highlights a significant improvement in tackling new tasks, with its high-compute configuration setting a new standard at 87.5%.

How o3 Outshines Traditional LLMs: From Memory to Program Synthesis

Traditional GPT models rely on “memorization”: learning and executing predefined programs based on massive training data. However, this approach struggles with novel tasks due to its inability to dynamically recombine knowledge or generate new “programs.”

o3’s Core Innovation: Dynamic Knowledge Recombination

- Program Search and Execution: o3 appears to generate natural language “programs” (such as Chains of Thought, CoT) to solve tasks and to execute them internally.

- Evaluation and Refinement: Using techniques that may resemble Monte-Carlo Tree Search (MCTS), o3 evaluates candidate program paths and selects the most promising one. OpenAI has not published the mechanism, so this description is an outside interpretation.

While this process is compute-intensive (requiring millions of tokens and significant costs per task), it dramatically enhances AI’s adaptability to new challenges.
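The generate-then-evaluate idea can be illustrated with a deliberately tiny Python sketch. It is not o3’s mechanism and it is not MCTS: it swaps in plain brute-force enumeration over a hypothetical set of grid operations (`PRIMITIVES`) and keeps the first program that reproduces every example. All names here are assumptions made for the illustration.

```python
from itertools import product

# Hypothetical primitive operations on a grid (a list of rows).
PRIMITIVES = {
    "identity": lambda g: [row[:] for row in g],
    "flip_lr": lambda g: [row[::-1] for row in g],
    "flip_ud": lambda g: [row[:] for row in g[::-1]],
    "transpose": lambda g: [list(col) for col in zip(*g)],
}


def run_program(program, grid):
    """Apply each named primitive in order."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid


def score(program, examples):
    """Fraction of (input, output) examples the program reproduces exactly."""
    hits = sum(run_program(program, x) == y for x, y in examples)
    return hits / len(examples)


def search(examples, max_len=2):
    """Enumerate programs shortest-first; return the best (program, score) found."""
    best = ((), -1.0)
    for length in range(1, max_len + 1):
        for program in product(PRIMITIVES, repeat=length):
            s = score(program, examples)
            if s > best[1]:
                best = (program, s)
            if s == 1.0:
                return best  # stop at the first program that fits everything
    return best


# Two demonstrations of a 90-degree clockwise rotation.
examples = [
    ([[1, 2], [3, 4]], [[3, 1], [4, 2]]),
    ([[5, 6], [7, 8]], [[7, 5], [8, 6]]),
]
program, fit = search(examples)
print(program, fit)
```

No single primitive solves the rotation, so the search has to combine two of them. The number of candidates grows exponentially with program length, which gives a rough intuition for why search-based approaches become expensive.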

Efficiency vs. Cost: Balancing o3’s Performance

Despite its remarkable performance, o3’s high-compute mode comes with significant costs. According to ARC Prize data:

- Efficient mode:

  - Cost per task: ~$20

  - Semi-Private Eval score: 75.7%

- High-compute mode:

  - Uses 172x the compute of efficient mode.

  - Achieves 87.5%, but at a much higher cost.
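For a rough sense of scale, the Python sketch below extrapolates from the two figures above. It assumes cost grows linearly with compute, which the source does not state, so the result is an order-of-magnitude estimate and not a reported price. The function names are hypothetical.

```python
EFFICIENT_COST_PER_TASK_USD = 20.0  # approximate figure quoted above
COMPUTE_MULTIPLIER = 172            # high-compute vs. efficient mode, quoted above
EFFICIENT_SCORE = 75.7              # percent, Semi-Private Eval
HIGH_COMPUTE_SCORE = 87.5           # percent, Semi-Private Eval


def estimated_high_compute_cost(cost_per_task=EFFICIENT_COST_PER_TASK_USD,
                                multiplier=COMPUTE_MULTIPLIER):
    """Assumption: cost scales linearly with compute."""
    return cost_per_task * multiplier


def extra_cost_per_point(cost_low, score_low, cost_high, score_high):
    """Additional dollars per task for each extra percentage point of score."""
    return (cost_high - cost_low) / (score_high - score_low)


high_cost = estimated_high_compute_cost()
marginal = extra_cost_per_point(EFFICIENT_COST_PER_TASK_USD, EFFICIENT_SCORE,
                                high_cost, HIGH_COMPUTE_SCORE)
print(f"Estimated high-compute cost per task: ${high_cost:,.0f}")
print(f"Estimated extra cost per percentage point: ${marginal:,.0f}")
```

Under that linear assumption, the last 11.8 percentage points cost far more per task than the first 75.7, which is the trade-off this section describes.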

While the current cost-performance ratio remains a challenge, advancements in optimization and hardware are expected to reduce costs in the coming months.

What Makes o3 a Groundbreaking Leap?

1. Task Adaptability

o3 dynamically generates and executes task-specific natural language programs, moving beyond the static “memorization” paradigm of previous generative AI models.

2. Generalization

Compared to the GPT series, o3 demonstrates near-human generalization capabilities, especially on benchmarks like ARC-AGI.

3. Architectural Innovation

o3’s success underscores the critical role of architecture in advancing AI capabilities. Simply scaling GPT-4 or similar models would not achieve comparable results.

Is o3 AGI?

While o3’s performance is extraordinary, it has not yet reached the level of Artificial General Intelligence (AGI). Key limitations include:

- Failures on Simple Tasks: Even in high-compute mode, o3 fails some tasks that are easy for humans, revealing gaps in fundamental reasoning.

- Challenges with ARC-AGI-2: Early data from ARC Prize suggests that o3 might score below 30% on the upcoming ARC-AGI-2 benchmark, while a smart human would still score over 95% with no training.

These challenges highlight that while o3 is a significant milestone, it remains a step on the path to true AGI.

Looking Ahead: The Future of o3 and AGI

1. Open-Source Collaboration

The ARC Prize initiative plans to launch the more challenging ARC-AGI-2 benchmark in 2025, encouraging researchers to build on o3’s success through open-source analysis and optimization.

2. Expanding Capabilities

Further analysis of o3 will help identify its mechanisms, performance bottlenecks, and potential for future advancements.

3. Advancing Benchmarks

The ARC Prize Foundation is developing third-generation benchmarks to push the boundaries of AI systems’ adaptability and generalization.

Conclusion: The Significance of o3

OpenAI’s o3 model represents a groundbreaking leap in generative AI, pushing the boundaries of task adaptability and dynamic knowledge recombination. By overcoming the limitations of traditional LLMs, o3 opens new avenues for addressing novel challenges.

This is only the beginning. With new benchmarks and collaborative research on the horizon, o3 sets the stage for further progress towards AGI. As we look ahead to 2025, the future of AI promises even greater possibilities.

Disclaimer: The content above includes contributions generated with the assistance of AI tools.